Data vault modeling has gained significant traction in the world of data warehousing, offering a flexible and scalable approach to storing and managing enterprise data. As organizations embrace the benefits of data vault, it becomes crucial to ensure optimal performance in the underlying data platform.

One such platform that has revolutionized cloud data warehousing is the Snowflake Data Cloud.

Snowflake’s unique cloud-based architecture and powerful features make it an ideal choice for implementing data vault models. However, to harness the full potential of Snowflake’s performance capabilities, it is essential to adopt strategies tailored explicitly for data vault modeling.

In this blog, we explore best practices and techniques to optimize Snowflake’s performance for data vault modeling, enabling your organizations to achieve efficient data processing, accelerated query performance, and streamlined ETL workflows.

Understanding Data Vault Modeling

Created in the 1990s by a team at Lockheed Martin, data vault modeling is a hybrid approach that combines traditional relational data warehouse models with newer big data architectures to build a data warehouse for enterprise-scale analytics. It is agile, scalable, no pre-modeling required, and well-suited for fluid designs.

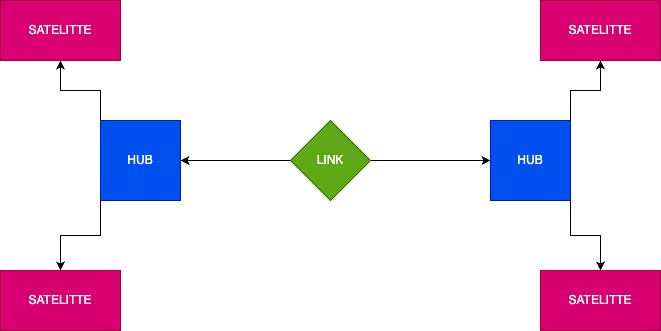

Data vaults are created with three core structures:

Hubs

The table that represents a core business entity within a company

Contain a defined business key and a few mandated data vault fields

Business keys are required to be unique per row

Do not contain any context or details about the business entity

Links

The table that defines a relationship between two or more Hubs

Contains no context or information on the business entity

One row represents the relationship between two entities with their unique business key

Satellites

Tables containing all contextual information about entities

Each row has a unique key, row entry date, and business key to link to the Hub for the entity

What are the Benefits of Using Data Vault Modeling?

Flexibility and adaptability for evolving business requirements

Simplified data integration and agility in data modeling

Incremental loading and historical data tracking capabilities

Enhanced scalability and performance through parallel processing

Challenges in Snowflake Performance for Data Vault Modeling

With its flexible nature and practically infinite storage space, Snowflake is the perfect data warehousing solution for creating a data vault. However, due to the nature of data vault modeling, some performance bottlenecks can be expected for implementation in Snowflake.

Here are some examples of areas to look at when creating your data vault:

Key Type

Unique identifying keys are essential to a data vault architecture, and there are three types of keys that can be utilized in a data vault with various advantages and disadvantages.

Sequential Keys

Sequential keys are an integer for each table that starts at 1 and goes up.

These keys are suitable for query performance but are terrible for load performance as they don’t allow parallel table-loading techniques.

Business Keys

Business keys are related to the business unit and unique to each row.

Unlike sequential keys, these keys allow massively parallel processing (MPP). Still, they are often a combination of several columns to become unique which can slow down performance and increase storage requirements.

Hash Keys

Hash keys combine business keys into a single column using a hash function.

Hash keys provide all key types’ best data load performance, consistency, and audibility. However, joining tables using a hash key can take longer than a sequential key.

Complex ETL Processes

Data vaults consist of a multi-tiered architecture that is scalable and flexible. These layers consist of a Staging Layer with incoming data, a Raw Layer with untouched data, and Business Vaults and Metrics Vaults with data precalculated with business or metric logic.

Because of data vault’s modeling structure, transformation queries for moving data between these layers can become exceedingly complex. This can make it nearly impossible to “handwrite” these SQL queries.

Getting Information in a User-ready Format

Information Marts are where business users will have access to the data. All business rules and logic are applied to these Marts. The queries to apply these business rules and logic can also become very complex in a data vault.

By design, satellite tables are source-specific to a particular business unit, and complex queries are needed to include multiple units in one query result.

Best Practices for Optimizing Snowflake's Performance

Luckily, there are tried and true methods to optimize performance in Snowflake for these challenges.

Hash Keys

Although the query performance for joins could be better with sequential keys than with hash keys, the other benefits far outweigh this failure. Utilizing hash keys provides the ability to efficiently process data loads using MPP while also providing superior query performance over composite business keys.

Data vault architecture emphasizes historical data tracking, allowing for traceability and auditing. Using hash keys makes it easier to track changes over time, as the hash values act as historical references. When a record is modified, a new hash value is generated, allowing for easy identification of changes and the ability to retain a historical record of data transformations.

Point-in-Time (PIT) Tables

A PIT table is a special type of table used to store data snapshots at different points in time. PIT tables can be used to reduce the complexity of queries for business logic and calculations by only presenting the satellite table keys relevant to the point-in-time snapshot for the reporting period required by the business.

If compared to a star schema, this essentially treats the PIT table as a fact table and the satellite tables as the dimension table, vastly simplifying the queries needed for reporting.

Multi-Table Insert (MTI)

Snowflake’s MTI feature can be very advantageous for data vault architecture. The following are the benefits of utilizing MTI:

Efficient Loading

Data vault architecture often involves loading large volumes of data from various sources into different tables. With MTI, you can insert data into multiple target tables with a single SQL statement. This reduces the number of round trips to the database and improves loading performance, especially when dealing with high-volume data ingestion.Atomicity and Consistency

MTI in Snowflake ensures atomicity and consistency during the data loading process. If any part of the multi-table insert fails, the entire operation is rolled back, preventing partial data loading and maintaining data integrity. This is crucial in data vault architecture, as it ensures consistent and reliable data loading across multiple tables.Parallel Loading

Snowflake’s MTI feature supports the parallel loading of data into multiple target tables. It leverages Snowflake’s distributed architecture, automatically distributing and executing the insert operation across multiple compute nodes. This parallelization enhances loading speed and enables efficient utilization of system resources, particularly when loading data into satellite tables in the data vault.Simplified ETL Logic

MTI simplifies the ETL (Extract, Transform, Load) logic for loading data into the data vault. Instead of writing complex logic to handle individual inserts into different tables, you can use a single SQL statement with multiple INSERT clauses to simultaneously load data into multiple tables. This reduces the complexity of the ETL process and improves development efficiency.Reduced Data Movement

Using MTI, you can minimize data movement between the ETL pipeline and the Snowflake database. With the ability to insert data directly into target tables, there is no need to stage data in temporary tables or intermediate storage. This reduces the overall data movement, resulting in faster loading and improved performance.

Here is an example of a basic multi-table insert:

INSERT ALL

INTO sales_data (product, sales_quantity)

INTO inventory_data (product, stock_quantity)

SELECT

product,

sales_quantity,

stock_quantity

FROM temp_data;

Multi-table inserts can also be conditional, writing data to each table based on some criteria:

INSERT ALL

WHEN sales_quantity > 50 THEN

INTO high_sales (product, sales_quantity)

ELSE

INTO low_sales (product, sales_quantity)

SELECT

product,

sales_quantity,

FROM temp_sales_data;

Modeling Tools

To help with the complex ETL queries for data vault architectures, it’s recommended to use a data modeling tool such as dbt. Modeling tools provide a visual representation of the data vault model, including Hubs, Links, Satellites, and PIT tables.

This visual representation helps ETL developers and architects understand the structure and relationships of the data vault entities, facilitating the design and development of ETL processes.

Some modeling tools generate ETL code based on the defined mappings and transformations. This code generation feature automates the generation of ETL scripts or jobs, reducing manual effort and ensuring consistency and accuracy in the ETL processes. Generated code can be further customized or integrated into existing ETL frameworks as needed.

Advanced Strategies for Enhancing Snowflake Performance

Clustering

Clustering can be used in a data vault architecture to improve query performance and reduce disk I/O. However, not all scenarios benefit from clustering. Clustering should not be used on hash keys, for instance, as it doesn’t give any performance benefit. This takes clustering out of the equation for hubs, links, and satellites. However, if utilizing a PIT table, you can benefit from clustering by the PIT date.

Example of creating a clustering key on a PIT table:

CREATE OR REPLACE TABLE product_pit (

hub_employee_key VARCHAR(20),

pit_load_dts DATETIME,

name_load_dts DATETIME,

desc_load_dts DATETIME

) CLUSTER BY (pit_load_dts, hub_employee_key);

Materialized Views and Dynamic Tables

Materialized views can be used to increase performance in a data vault architecture by precomputing and storing the results of complex or frequently executed queries. Materialized views can help optimize complex queries by providing alternative access paths or optimized data structures.

Snowflake’s query optimizer can utilize materialized views to rewrite queries automatically and leverage the pre-computed results for better performance. This reduces the need for manual query optimization and improves overall query execution time.

Dynamic tables can be used in a similar fashion to increase performance. Unlike materialized views, a dynamic table’s SQL query can be more complex, including joins between multiple tables. However, Snowflake’s query optimizer will not automatically utilize dynamic tables to improve performance.

CREATE DYNAMIC TABLE dwh.dv_customer_order_pit

lag = '1 min'

warehouse = vault_wh

AS

SELECT

co.customer_key,

co.order_key,

co.order_date,

co.order_total,

ROW_NUMBER() OVER (PARTITION BY co.customer_key ORDER BY co.order_date DESC) AS row_number

FROM dwh.staging_customer_order co

JOIN dwh.dv_customer c ON co.customer_key = c.customer_key;

Performance Monitoring Tools

Snowflake provides several performance monitoring and optimization tools to help users analyze and enhance the performance of their queries and workloads. For instance, the Snowflake Query Profile provides detailed information about the execution of a specific query.

It includes query execution statistics, such as execution time, resource usage, data scanned, and stages involved. Query Profiles can be accessed through the Snowflake web interface or retrieved programmatically using Snowflake’s Query ID or other identifiers.

Snowflake also offers utilities such as the Snowflake Worksheet and SnowCD (Snowflake’s Performance Tuning Advisor) to help analyze query performance and provide recommendations for optimization. These tools analyze query execution plans, data distribution, and query patterns to suggest improvements and optimize query performance.

In addition to Snowflake’s native utilities, phData has created the Advisor Tool that works specifically with Snowflake. With the Advisor Tool, you can quickly and easily identify opportunities to improve the configuration, security, performance, and efficiency of your Snowflake environment.

Closing

Optimizing Snowflake’s performance for data vault modeling is a crucial step towards achieving efficient data integration, accelerated query performance, and streamlined ETL workflows. By implementing the best practices and strategies outlined in this blog, organizations can unlock the full potential of their data vault architecture in Snowflake.

Remember that performance optimization is an ongoing process. Continuously monitoring query execution, analyzing query plans, and staying up-to-date with Snowflake’s latest features and enhancements will ensure your data vault model performs at its best. Regularly evaluating and fine-tuning your Snowflake environment, schema design, query execution, and ETL processes will keep your data vault architecture running smoothly, adapting to changing business needs and data demands.

If you have any other questions about optimizing Snowflake’s performance for data vault modeling, please contact our team of Snowflake experts!

FAQs

A star schema and a Data Vault are two different approaches to data modeling and data warehousing, each with its own characteristics and benefits. A star schema is a widely used dimensional data model commonly found in traditional data warehousing. Its key features are simplicity, denormalized structure, and aggregation of data, which are especially useful for data analytics. A Data Vault is a data modeling methodology and architecture specifically designed for enterprise data warehouses. It focuses on providing a flexible and scalable approach for integrating and storing data from various sources.

As a relatively newer data warehouse methodology, building out a Data Vault for the first time can feel daunting. The first step is to learn about the concepts of Data Vault. This blog is an excellent place to start.