Data ingestion is one of the most crucial steps in the ELT/ETL (Extract, Load, Transform/Extract, Transform, Load) procedure. It is impossible to perform any transformation without the availability and proper ingestion of the data.

Data ingestion pipelines connect your databases and tools to your designated data warehouse, which acts as the hub where the actual data is stored. The processes set up for ingesting data into the warehouse set the standard for every other process in the data team.

Today’s organizations rely heavily on data for predicting trends, market forecasting, planning for future requirements, understanding consumers, and making business decisions. To accomplish these tasks, it is essential to have fast access to reliable enterprise data in a single place. The process of data ingestion into the Snowflake Data Cloud makes all of this possible.

This article covers the most popular native methods for data ingestion in Snowflake, along with best practices to simplify the process and insights for addressing data ingestion challenges.

What is Data Ingestion?

Data ingestion is the process of collecting large amounts of data from several sources and transferring it to a target site where it can be stored and analyzed. The target site is usually a database, document store, data mart, or data warehouse.

Data can come from a variety of sources, such as web scraping, spreadsheets, SaaS applications, or in-house apps.

Enterprise data is generally stored in various formats and sources and managed by multiple users. For example, sales data is often stored in Salesforce.com, whereas product information might be stored in relational database management systems (DBMS). Multiple locations with multiple users often result in disorganized data. This makes the case for data ingestion even stronger.

What is Snowpipe?

Snowpipe is a Snowflake service for quick and simple data ingestion. With Snowpipe, data can be loaded from files as soon as they are available in a stage. Because Snowpipe uses a serverless compute model, Snowflake manages the load capacity and scales compute resources to meet demand.

How Does Snowpipe Ingest Data?

When creating a pipe, the user defines how the data is loaded through a COPY statement, which identifies the source stage and the target table. Semi-structured data types like JSON or Avro are also supported, making Snowpipe very flexible and efficient. Generally speaking, there are two main ways to identify the data to be ingested into Snowflake.
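To make the relationship between a pipe and its COPY statement concrete, here is a minimal sketch that only renders the SQL text of a pipe definition. The pipe, table, and stage names are hypothetical, and the sketch assumes the target table can accept the chosen file format (for JSON, typically a single VARIANT column). It does not connect to Snowflake.

```python
def render_create_pipe(pipe, table, stage, file_type="JSON", auto_ingest=True):
    """Return the text of a CREATE PIPE statement that wraps a COPY INTO statement."""
    flag = "TRUE" if auto_ingest else "FALSE"
    return (
        f"CREATE PIPE {pipe}\n"
        f"  AUTO_INGEST = {flag}\n"
        f"  AS\n"
        f"  COPY INTO {table}\n"
        f"  FROM @{stage}\n"
        f"  FILE_FORMAT = (TYPE = '{file_type}');"
    )


# Hypothetical object names, used for illustration only.
statement = render_create_pipe("raw.events_pipe", "raw.events", "raw.events_stage")
print(statement)
```

The COPY INTO clause names both the source (the stage) and the target (the table), which is why the pipe itself needs no separate source or target setting.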

Cloud Messaging Snowpipe Automation: In automated data loads, event notifications from the cloud storage service inform Snowpipe that new data is available for ingestion. Snowpipe then copies the files into a queue, from which they are loaded into the target table in a serverless, continuous fashion as specified by the parameters in the pipe object.

Snowpipe REST Endpoints: Alternatively, a client application can call a public REST endpoint with the name of a pipe object and a list of data file names. When new data files matching the list are discovered in the stage referenced by the pipe object, they are queued for loading. The data is then loaded from the queue into the Snowflake tables as specified by the parameters defined in the pipe.
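As a rough illustration of the REST path, the sketch below builds (but does not send) a request to the Snowpipe `insertFiles` endpoint. The account name, pipe name, and file path are hypothetical. A real call also needs an `Authorization` header carrying a key-pair JWT, which is omitted here, so treat this as an outline to check against Snowflake's REST API documentation rather than a working client.

```python
import json
import uuid


def build_insert_files_request(account, pipe, file_paths):
    """Build the URL and JSON body for a Snowpipe insertFiles call (nothing is sent)."""
    request_id = uuid.uuid4()  # lets the caller trace this submission later
    url = (
        f"https://{account}.snowflakecomputing.com"
        f"/v1/data/pipes/{pipe}/insertFiles?requestId={request_id}"
    )
    # Paths are relative to the stage location referenced by the pipe.
    body = json.dumps({"files": [{"path": path} for path in file_paths]})
    return url, body


# Hypothetical account, fully qualified pipe name, and staged file.
url, body = build_insert_files_request(
    "myaccount", "mydb.raw.events_pipe", ["2024/01/events_001.json"]
)
print(url)
print(body)
```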

For the most cost-effective and efficient experience with data ingestion through Snowpipe, follow the recommendations in the documentation on Snowflake’s official website. This approach helps strike a good balance between performance and cost.

What are the Different Types of Data Ingestion Methods in Snowflake?

The different methods of data ingestion offer varying benefits for the organization. Having the ability to choose a preferred method allows organizations to manage data ingestion in a way that best enables them to perform data analysis and address business challenges.

Two of the most popular methods for data ingestion in Snowflake are batch loading and streaming data ingestion. The right method depends on the business requirements of the user’s organization.

Streaming Data Ingestion

Also referred to as real-time streaming, this data ingestion method is most helpful when the data collection process is extremely time-sensitive. In streaming data ingestion, the data is extracted, processed, and stored as it arrives so that it can support real-time decision-making.

Pros:

- Helps companies in gaining insights instantly

- Helps organizations to make informed decisions at a quicker pace

- Boosts the agility of business operations

- Addresses operational issues quickly

- Enhances the overall customer experience dynamically

Cons:

- Because data is dynamic and unpredictable, extensive error checking is required before data storage

- There is no fixed rate of ingestion, and without a proper data retention policy, more storage space may be used
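The error-checking concern listed above can be sketched as a small validation step that runs on each record before it is stored. The required field names and sample records are hypothetical, and a production pipeline would likely apply richer checks (types, ranges, duplicates).

```python
from typing import Iterable, Iterator

REQUIRED_FIELDS = ("event_id", "timestamp")  # hypothetical schema


def validate_stream(records: Iterable[dict], rejected: list) -> Iterator[dict]:
    """Yield valid records one at a time; collect invalid ones for later review."""
    for record in records:
        if all(record.get(field) is not None for field in REQUIRED_FIELDS):
            yield record
        else:
            rejected.append(record)


incoming = [
    {"event_id": 1, "timestamp": "2024-01-01T00:00:00Z"},
    {"event_id": None, "timestamp": "2024-01-01T00:00:01Z"},  # null id
    {"timestamp": "2024-01-01T00:00:02Z"},  # missing id
]
rejected = []
accepted = list(validate_stream(incoming, rejected))
print(len(accepted), "accepted,", len(rejected), "rejected")
```

Because the function is a generator, records are checked as they arrive rather than after the whole stream has been buffered.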

Batch Ingestion

This method is recommended for repeatable procedures such as daily reports. When ingestion happens in batches, the data is moved at regularly scheduled intervals.

Pros:

- Handles a large amount of data at a time, including historical data

- Data types and volumes are known in advance and predictable long-term

Cons:

- The entire job might be unsuccessful if there is an error in the data or the batch size is not properly configured
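One common way to soften the failure mode described above is to split the job into fixed-size batches and record failures per batch, so one bad batch does not discard the others. This is a generic sketch, not a Snowflake feature: `fake_load` is a hypothetical stand-in for whatever actually loads a batch.

```python
def make_batches(rows, batch_size):
    """Split rows into consecutive lists of at most batch_size items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return [rows[i:i + batch_size] for i in range(0, len(rows), batch_size)]


def load_in_batches(rows, batch_size, load_batch):
    """Load each batch independently; return rows loaded and failed batch details."""
    loaded = 0
    failed = []
    for index, batch in enumerate(make_batches(rows, batch_size)):
        try:
            load_batch(batch)
            loaded += len(batch)
        except ValueError as exc:
            failed.append((index, str(exc)))
    return loaded, failed


def fake_load(batch):
    """Hypothetical loader that rejects any batch containing a null row."""
    if any(row is None for row in batch):
        raise ValueError("batch contains a null row")


rows = [1, 2, 3, None, 5, 6, 7]
loaded, failed = load_in_batches(rows, 3, fake_load)
print(loaded, failed)
```

Smaller batches limit how much data is held back by a single bad row, at the cost of more load operations.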

Best Practices for Simplifying the Data Ingestion Process

1. Remain flexible and open to alternate data ingestion methods

As mentioned above, there are two key methods of data ingestion through Snowpipe into Snowflake. Each has its benefits in varying scenarios. Data ingestion is a dynamic procedure. Consider all aspects of the project before determining which method of data ingestion is ideal.

Utilizing both methods regularly will ensure users remain well-versed on both procedures and acutely in tune with when and how each method can optimize the data ingestion processes.

2. Anticipate difficulties and plan accordingly

Before data can be analyzed, it must be transformed into a usable form. As the volume of data increases, it becomes more and more difficult to process. Proper data discovery should be performed in advance to confirm the volume and type of the data, its source, the compatibility of the column data types, and the target destination.

Thankfully, Snowflake is compatible even with legacy data warehouses. Migrating from one requires special attention to the sizes of the data types involved, which will ultimately guide the user in choosing the destination Snowflake account.
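A small part of that discovery work, checking column data type compatibility, can be sketched as follows. The sketch assumes sample values arrive as strings (for example, from a CSV extract), and it uses a simplified three-type model (`int`, `float`, `str`) rather than Snowflake's actual data types. The column names and target schema are hypothetical.

```python
def infer_type(values):
    """Return the narrowest of 'int', 'float', 'str' that fits all non-null strings."""
    def fits(cast):
        for value in values:
            if value is None:
                continue
            try:
                cast(value)
            except (TypeError, ValueError):
                return False
        return True

    if fits(int):
        return "int"
    if fits(float):
        return "float"
    return "str"


def profile(rows):
    """Infer a type for every column found in the first sample row."""
    return {col: infer_type([row.get(col) for row in rows]) for col in rows[0]}


def incompatible_columns(inferred, target):
    """List columns whose inferred type cannot be stored in the target type."""
    accepts = {"int": {"int", "float", "str"}, "float": {"float", "str"}, "str": {"str"}}
    return [col for col, kind in inferred.items() if target.get(col) not in accepts[kind]]


sample = [
    {"id": "1", "price": "9.99", "sku": "A-1"},
    {"id": "2", "price": "12", "sku": "B-2"},
]
target_schema = {"id": "int", "price": "int", "sku": "str"}  # hypothetical target
inferred = profile(sample)
print(inferred)
print(incompatible_columns(inferred, target_schema))
```

Here the `price` column is flagged because the sample contains a decimal value while the hypothetical target column expects integers.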

3. Automate the procedure by enabling the self-service feature

A business may require ingesting data from several sources at any given time. If a company is working at a centralized level, then there may be trouble in executing each unique request. Automating the procedure in this scenario empowers individual business users to handle their specific procedure on their own with little or no necessary intervention.

As the volume and complexity of data increase, manual data ingestion techniques risk becoming unreliable. Automating data ingestion saves time, increases productivity, and reduces errors.

Data Ingestion Tools in Snowflake

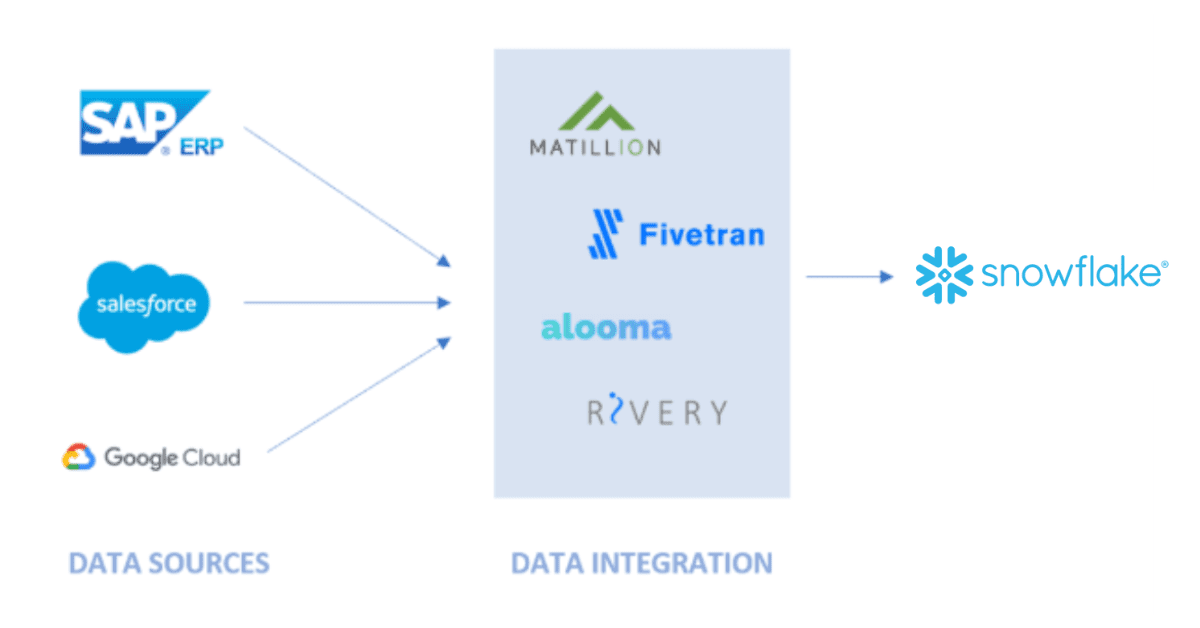

Having various data sources across an organization with various requirements can pose a major challenge. The data integration process involves accumulating the data from various sources and then enabling the users to process it for data ingestion. Snowflake can operate with several data integration tools like Fivetran, Alooma, Matillion, Stitch, etc.

In a future blog, we’ll cover the best data integration tools for Snowflake, so stay tuned!

Closing

After reading this article, you should have a clearer idea of what data ingestion is and the efficiencies behind it. Data ingestion tools can support businesses in their strategic decision-making and improve business intelligence. They reduce the overall complexity of bringing data together from various sources and allow you to work with different types of data and schemas.

Moreover, an efficient data ingestion procedure can surface actionable insights from the collected data in a well-organized and straightforward manner. Following data ingestion practices that rely on self-service, automation, and proper data discovery ahead of time will help produce a fast, seamless, reliable, and dynamic data ingestion process.

Looking to succeed with Snowflake? As the 2022 Snowflake Partner of the Year, phData can help your organization set up your entire Snowflake environment from scratch in the most efficient and effective way possible. Reach out today to learn more!

FAQs

Snowflake supports ingesting real-time data, integrating data, and simplifying data usage. For example, the Snowflake Kafka Connector reads data from one or more Kafka topics and loads it into a Snowflake table.

Data ingestion is the procedure of transporting data from different sources to a single data processing or storage repository. There are different ways of designing an ingestion framework, based on various architectures and models. Data ingestion can be conducted in batches or by streaming (real-time).