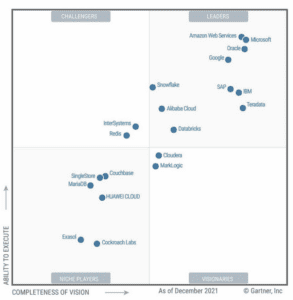

The world of business intelligence and data modernization has never been more competitive than it is today. The landscape of companies competing in this very hot industry is ever-changing, with some technologies separating themselves with their innovation and ease of use.

At phData we have the luxury of working with a diverse array of clients differing in industry, location, and size. Our visibility into a swath of different companies allows us to gauge the market for different emerging technologies and analyze the current technology landscape.

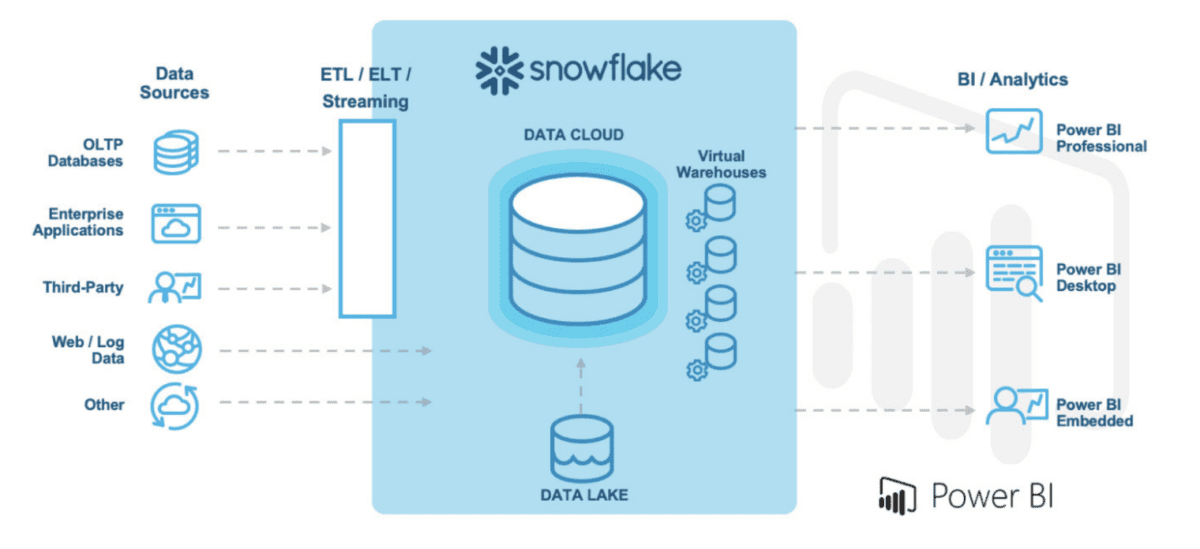

Two of the platforms that we see emerging as a popular combination of data warehousing and business intelligence are the Snowflake Data Cloud and Power BI.

In the market, phData has seen Snowflake and Power BI become more common as a data platform and data visualization pairing that provides best-in-class capabilities from both Snowflake and Microsoft.

This guide will provide context behind the relationship between the platforms, uncover a few common misconceptions, and explore some best practices for deploying Snowflake and Power BI together.

*Note: It is important to call out that selecting tools for your analytics needs is just a smaller component of creating and implementing an analytics strategy. Much of what is discussed in this guide will assume some level of analytics strategy has been considered and/or defined.

Snowflake is a cloud computing–based data cloud company that provides data warehousing services that are far more scalable and flexible than traditional data warehousing products.

Having gone public in 2020 with the largest tech IPO in history, Snowflake continues to grow rapidly as organizations move to the cloud for their data warehousing needs.

Power BI is a data visualization and analysis tool that is one of the four tools within Microsoft’s Power Platform. Debuting in 2015, Power BI has undergone meaningful updates that have made it a leader not just in data visualization, but in the business intelligence space as well.

Microsoft Power BI has been the leader in the analytics and business intelligence platforms category for several years running.

Snowflake and Power BI are both market and thought leaders in their respective industries with each leading in innovations and growth amongst BI and data modernization platforms. Because of this, they are a common combination for companies when selecting tools for their analytics needs.

Now you might be asking, “if both of these tools are widely adopted, what’s the issue?”

Here’s where things get fun, let’s dive in!

Despite dozens of clients and countless projects leveraging Snowflake and Power BI, there is still some resistance from both clients and vendors to using the two platforms together.

One big issue that contributes to this resistance is that although Snowflake is a great cloud data warehousing platform, Microsoft has a data warehousing tool of its own called Synapse.

In a perfect world, Microsoft would have clients push even more storage and compute to its Azure Synapse platform.

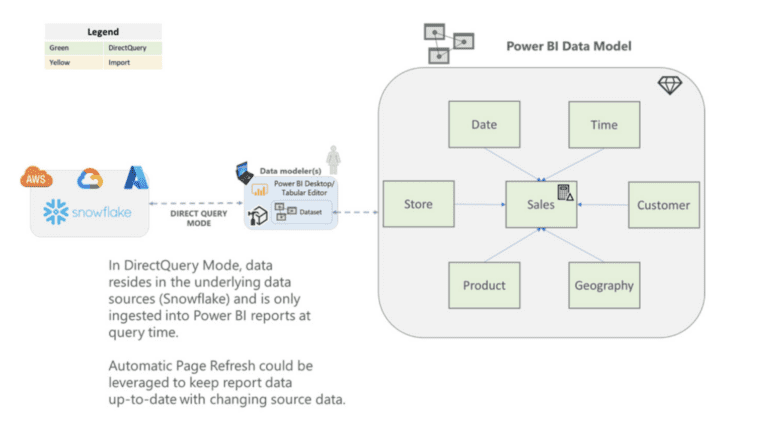

On the other hand, Snowflake wants to drive as much storage and compute onto their platform as possible too. One of the easiest ways for Snowflake to achieve this is to have analytics solutions query their data warehouse in real-time (also known as DirectQuery). This ensures the maximum amount of Snowflake consumption possible.

While Microsoft might be fine with your organization using Snowflake – especially when Snowflake is deployed on Azure – Power BI is most efficiently used when running its reports on an imported or cached model of the data, which limits Snowflake’s consumption.

We’ll get into some more details in this guide on why that is, but for now, this context should help you understand how the two companies view one another in the market.

As discussed in a previous section, there are a lot of complexities that can arise when using these two platforms, along with some misconceptions. In this section, we are going to discuss some of these items and dive deep into whether these issues have any merit.

Our Rating: False

This is actually one of the most common misconceptions that we encounter about using Snowflake and Power BI. Microsoft has developed a native connector for Power BI that allows developers to choose Import or DirectQuery mode as well as the capability to utilize Azure AD Single Sign On (SSO) with Snowflake.

Even though at face value the claim is outright false, there is some important historical context from which it originates.

Snowflake was originally launched in October 2014, but it wasn’t until 2018 that Snowflake became available on Azure. At first, the only way to connect to Snowflake from Power BI was via an ODBC connection.

The December 2019 release of Power BI Desktop introduced a native Snowflake connector that supported SSO and did not require driver installation. The release of the native connector was only four years ago at the time of this guide’s writing.

The June 2021 release of Power BI Desktop introduced Custom SQL queries to Snowflake in DirectQuery mode. Although this feature brought Power BI up to par with some of its competitors, this lag in integration with Snowflake introduced ambiguity for a lot of people about whether Microsoft was interested in making their platforms work with Snowflake.

Our Rating: Somewhat True

Microsoft would obviously prefer that clients use Azure Synapse Analytics over other cloud tools such as Snowflake. However, Snowflake runs better on Azure than it does on AWS – so even though it’s not the ideal situation, Microsoft still sees Azure consumption when organizations host Snowflake on Azure.

With Azure being a common landing spot for Snowflake, the term “frenemy” is appropriate here, especially if the client is using other Microsoft tools. Both companies seem to recognize this “necessary evil” dynamic as they continue to be partners as of 2022.

Our Rating: Somewhat True

Although this claim is fairly misleading, it is true that the amount of DAX functions available to users is limited when using DirectQuery instead of Import mode. More importantly users must understand that this limitation is not unique to Snowflake.

The most commonly used functions that you lose when using DirectQuery are Time Intelligence functions such as TOTALYTD, DATESYTD, and EOMONTH. To get around losing Time Intelligence functions, a robust calendar table is suggested to reference for time-based metrics.

While the loss of certain DAX functions is definitely a shortcoming that we hope Microsoft will address in the near future, the impact of these lost DAX functions is not necessarily as big as you would expect.

Our Rating: True, but getting less true

The way that Power BI constructs queries to be sent back to the source data to render the visuals does throttle DirectQuery connections, although this throttling is not unique to Snowflake. This is one of the many reasons that Power BI is more efficient when using Import mode, a cached dataset hosted in Power BI Service.

The good news is that the Snowflake and Microsoft teams both agree that this is an issue and have recently worked together to make improvements to this limitation.

When Power BI loads a visual in DirectQuery, it sends several queries back to the source data instead of a single query. This causes more queries being needed to render a report. However, with the improvements made by Snowflake and Microsoft together with Query Parallelization, users can now alter the number of concurrent queries sent back to the data source. Query Parallelization doesn’t reduce the amount of queries sent, but allows the user to increase how many are packaged together and sent at the same time versus sequentially.

Similar to Query Parallelization, Microsoft introduced Horizontal Fusion in September of 2022. Essentially, Horizontal Fusion reduces multiple queries that have a similar shape into a one query. Power BI then sends that singular complex query back to Snowflake source eliminating any duplicative trips back to Snowflake.

As the previous section suggests, there are some complexities when using Power BI with Snowflake. Although using Power BI with Snowflake is similar to using Power BI with other data sources, we do have some specific suggestions when using the two tools together, especially since the use of DirectQuery will be top of mind.

You can connect Power BI to Snowflake just like you can connect Power BI to any other database using the native connector that was released in 2019. Prior to this, an ODBC connector was the only way to connect to Snowflake from Power BI.

In 2021, Microsoft enabled Custom SQL queries to be run to Snowflake in DirectQuery mode further enhancing the connection capabilities between the platforms.

Using the native connector is not only easier for Power BI users to connect to Snowflake, but it also enables Azure AD SSO to be enabled.

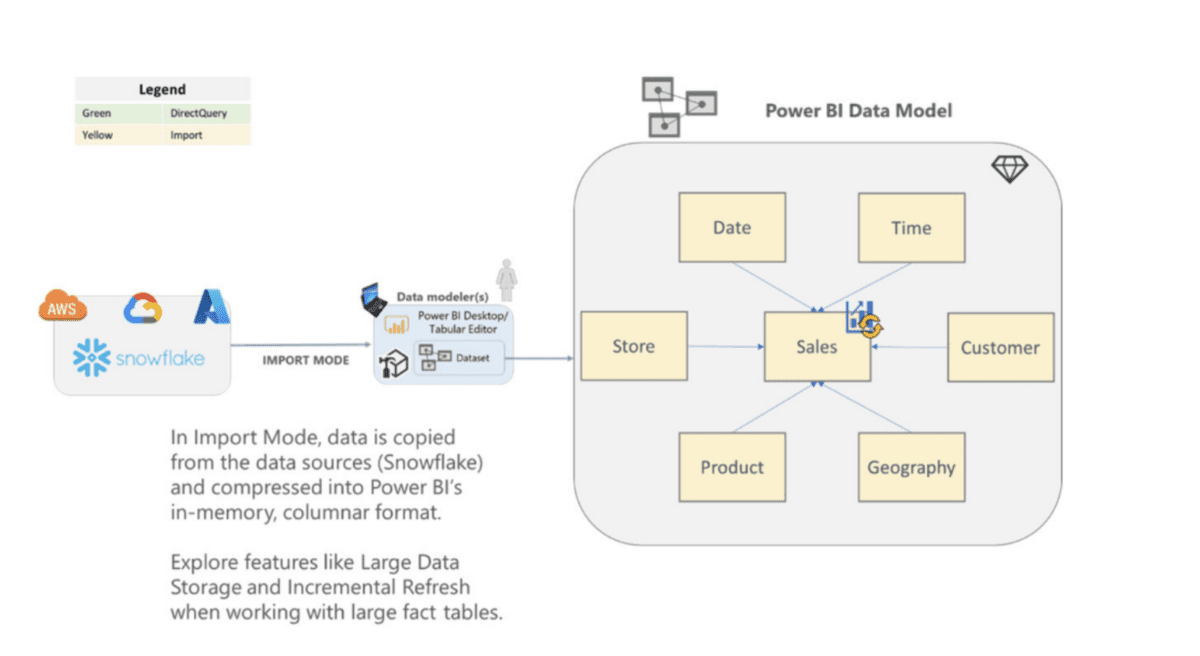

Power BI offers two main connection types when connecting to data sources, Import and DirectQuery. We will explain the benefits and opportunities for both, when to use one over the other, and when to pair them in a composite model.

Import mode is the most common connection type we see when working with customers. Importing data allows you to ingest a copy of the source data into an in-memory database. From there, Power BI’s underlying storage engine, VertiPaq, provides significant compression capabilities.

The VertiPaq engine is columnar based which is different from a basic SQL server, which traditionally computes on rows and offers slow performance.

VertiPaq can compress data in multiple ways that store the data into memory, speeding up DAX calculations significantly. A general rule of thumb is that you can typically expect about 10x compression when importing data into Power BI.

Since Import mode creates a cache of the dataset, a refresh schedule will need to be set up to keep data current. Refreshes of large datasets will need thoughtful up-front planning. Features like Power BI Premium Large Dataset Storage and Incremental Refresh should be considered for importing large data volumes.

You can also connect to Snowflake with Power BI using DirectQuery mode. Although a majority of use cases for tools like Tableau or Power BI rely on cached data, use cases like near real-time reporting need to utilize direct queries.

In late 2021, Power BI introduced custom SQL queries to Snowflake using DirectQuery. The connector is built in such a way that the input SQL statement is formatted as Snowflake SQL when the command is submitted, so users only need to write their query in standard SQL.

Another thing to note is that you will need to include the fully qualified table name in the query to ensure it connects successfully. See below for an example:

select *

from table(information_schema.task_history(scheduled_time_range_start=>dateadd(‘hour’, -1,

current_timestamp()),task_name=>’load’))

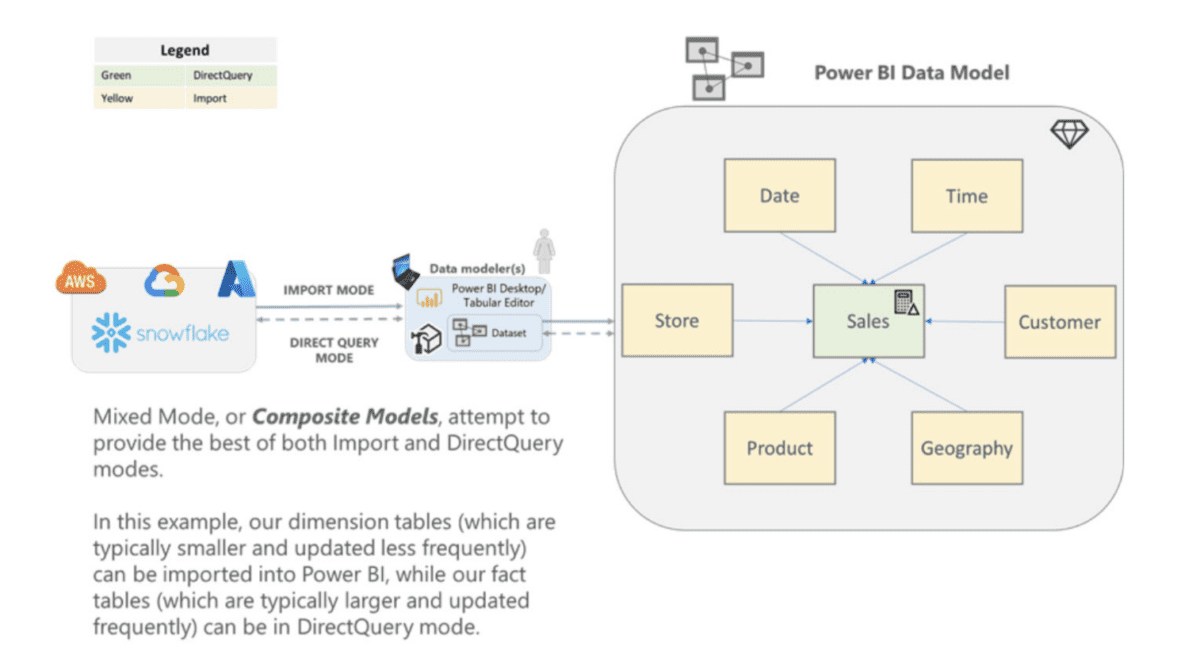

For those users who want to leverage the best of Import and DirectQuery methods, Microsoft now offers composite modes. In a composite model, the storage mode can be configured differently for each table. With Snowflake, we recommend setting the largest, most frequently updated fact tables as DirectQuery connections, and the rest as Import or dual mode. This way, each of your contextual data points used for filtering are stored in memory.

This permits you to only pull the data necessary to make decisions while allowing you to stay updated on the latest information and maintain report performance levels.

Using a composite model could result in additional consumption of Snowflake queries when compared to Tableau, as Tableau does not have the option to create a composite model.

Because Tableau only has the option of all Extract or all DirectQuery within their data sets, there may be situations where the Tableau developer chooses to Extract their data where the Power BI developer could utilize a composite model. Even with a composite model, the same respective considerations for Import and DirectQuery hold true.

For more information on composite models, check out Microsoft’s official documentation.

At phData, we suggest using whatever connection method is best for our client’s specific use case, although selecting DirectQuery as the connection mode when unnecessary can often lead to slower performance of reports and dashboards.

In Import mode, Power BI efficiently caches the data and can often lead to better report performance. On the other hand, when the data is rapidly changing, not using DirectQuery in your fact tables can result in decisions being made based on data that is not up to date.

It is important to note that this methodology is generally tool agnostic and is recommended by Tableau and other BI platforms as well.

Once you have chosen the method to connect to your data (Import, DirectQuery, Composite), you will need to make sure that you create an efficient and optimized data model. Creating an efficient data model can be the difference between having good or bad performance, especially when using DirectQuery.

Here are some of our best practices for building data models in Power BI to optimize your Snowflake experience:

1. Use a STAR schema – A star schema will simplify joins and queries, process transactions faster, and allows for continuous development as new data becomes available without worrying about changing the granularity of the entire source. We recommend utilizing Snowflake to build out the necessary views and tables for your comprehensive data models. These tables and views that you build will be pieced together to create the enterprise reporting data models that will ultimately be used to feed your Power BI reporting.

2. Use only the necessary data in your model – Limiting the columns in your data model is almost always more impactful to the model’s performance than limiting the number of rows. Whether you limit the columns in your Snowflake reporting view or use Power Query, unnecessary data will slow rendering and data refresh times.

3. Use materialized views or aggregated tables where possible – It is best practice to create materialized views for your reporting needs rather than querying the actual source tables in your production environment. If your reporting does not require your data to be at its lowest level of granularity, consider aggregating your data to the required level of granularity to reduce the amount of data being pulled in and the subsequent workloads that would otherwise be necessary to aggregate the data once in Power BI Desktop.

4. Hide the unnecessary relationship fields – Once you have built your data model in Power BI, hiding the field in your relationships that you don’t want users to have access to will help with confusion by your end users.

5. Assume referential integrity in relationships – Choosing the “Assume Referential Integrity” option will streamline the queries sent between tables in a relationship and will result in quicker render times. This tactic can be used in all connection modes but is especially impactful in DirectQuery mode.

6. Avoid bi-directional filtering – Enabling bi-directional filtering in your relationships not only makes your data model more complex, but it can also cause undesired end-user behavior.

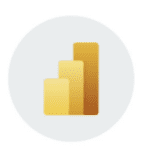

If you use the native Snowflake connector in Power BI Desktop, you will be able to set up Azure AD SSO with Snowflake. Setting up Azure AD SSO allows you to use the security rules that are set up in Snowflake – otherwise, you would rely strictly on controlling access via Power BI.

Setting up Azure AD SSO between Power BI and Snowflake will require actions in three different areas:

In Azure Portal you’ll need to set up Snowflake in your Applications menu in order to get the security token to then give Snowflake

In Snowflake you’ll need to use the Azure token to create a mapping of Azure AD to Snowflake usernames. This script is provided in Snowflake’s documentation

In the Power BI tenant settings you’ll need to enable Snowflake SSO as well as set up any necessary data sources using gateways

As shown in Snowflake’s documentation on this topic, you can see how the Azure AD process passes your Microsoft credentials to Snowflake to allow for authentication.

Many organizations use On-Premises Gateways for cloud-to-cloud connections for the added layer of security. If you’re using On-Premises Gateways to connect Power BI to your Snowflake data sources, make sure to get the right Azure VMs to host the gateways. Now you might be asking, why would someone use an On-Premises Gateway with a cloud-to-cloud connection?

There are two scenarios that we see that call for this configuration:

Different VMs are meant for different types of jobs. Make sure to find the right one for your use case. Here are some considerations for choosing the right VM:

There are also different types of Azure Virtual Machines that you can choose from:

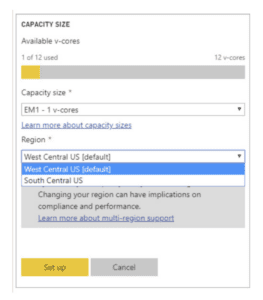

When deploying Power BI Premium Capacity, you can select which region you want the data center for your dedicated capacity to be located in. Minimizing the distance between your Snowflake and Power BI data centers can reduce latency and improve query performance.

If you are running both of them on Azure, selecting the same data center will result in the best performance for your Snowflake to Power BI connection.

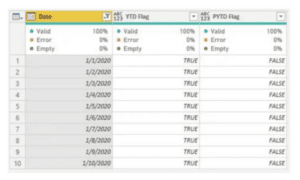

Sales YTD = CALCULATE( SUM([Sales]), [YTD Flag]=”TRUE” )

As discussed earlier in this guide, using DirectQuery in Power BI will result in lost DAX functionality, the biggest of these functions being Time Intelligence functions. We recommend that you use a dates table to get around this loss of functionality.

Having this dates table widely available to developers will ensure that the necessary fields are there to compensate for the loss of DAX.

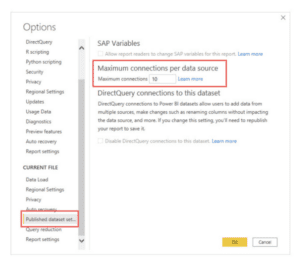

When using DirectQuery for your model, the default amount of concurrent queries that can run to the source is 10, but you can increase that to 30 in your Settings in Power BI Desktop.

In your Power BI Desktop report – go to File -> Options and settings -> Options -> Published dataset settings.

*Note: This does increase concurrent queries to the source system if your Snowflake instance is not appropriately sized for the queries being generated. If this is your case, this may not help your performance.

If you are using an On- PremisesGateway, you can adjust the number of mashup engine containers running simultaneously on the gateway. These containers are the functions running your queries, and are the same components that run in Power BI Service and Desktop.

The more mashup containers, the more queries you can concurrently run through the gateway. Finding a good balance here is critical as these mashup containers require resources and will affect the VM’s performance.

*Note: This does increase concurrent queries to the source system if your Snowflake instance is not appropriately sized for the queries being generated. If this is your case, this may not help your performance.

If you are using an On-Premises Gateway, you can adjust the number of mashup engine containers running simultaneously on the gateway. These containers are the functions running your queries, and are the same components that run in Power BI Service and Desktop.

The more mashup containers, the more queries you can concurrently run through the gateway. Finding a good balance here is critical as these mashup containers require resources and will affect the VM’s performance.

Here are the steps on how to change this setting:

In addition to the other strategies listed above, here are some more general tips for making sure that your Snowflake and Power BI experience is optimized.

If you are using Snowflake for your data warehousing needs and are now looking for a BI platform, make sure that you consider Power BI. The Microsoft Power Platform has been the Gartner Magic Quadrant Business Intelligence leader over the past several years.

Power BI’s cost, easy learning curve, and continued investment from Microsoft in new and innovative features make it a popular choice for enterprise organizations.

From a pricing standpoint, Power BI Pro licenses (used by developers to publish content) start at $9.99 per month per user, while Premium Capacity starts at $4,995 a month but depends on the number of developers and deployment capacity.

Organizations at the E5 level of Office get Pro licenses for free and Premium Per User (PPU) licenses for $10 a month per user (compared to $20 for non-E5). Because of its scale and integration with the other Microsoft tools, pricing on Power BI is hard to beat.

While there is not currently a direct connector for Snowflake within Power Apps or Power Automate, data flows can be configured within Dataverse using Azure Analysis Services to read from and write to Snowflake tables.

This allows customers to take advantage of the Power Platform tools to analyze data, build solutions, automate processes, and even build Microsoft Power Virtual Agents to handle requests without intervention. These additional tools in the Power Platform open up more possible consumption of Snowflake data than there would be otherwise.

Snowflake in Conjunction With Tabular Models

Reading and Writing to Snowflake Data Warehouse from Azure Databricks using Azure Data Factory

Other options include adding a custom connector to reach Snowflake by using CData’s Snowflake driver, although this will introduce additional costs.

One of the most attractive features for organizations moving to the Power Platform is the integration with the Office suite. Moving from Excel to Power BI is relatively easy considering that they are both Microsoft products and the syntax is very similar compared to other BI tools.

As more organizations move away from static reporting tools like Excel, Power BI is seen as a natural next step. For a very similar situation that we have worked on, check out the use case at the link below to find more information about how we transformed a Fortune 500 financial company’s reporting by transitioning them from Excel to using Snowflake and Power BI.

In this engagement, phData helped transition reporting that had been traditionally done in Excel to Power BI reports that were connected to Snowflake. This enabled their data engineering teams to create fast and efficient data pipelines that helped feed Power BI reports and eliminated hours of manual work to update Excel and CSV files.

Microsoft shows continual investment in the product and its user base by updating Power BI monthly. These updates include new features, improvements to existing ones (as requested by users), and additional data source connections.

phData’s Power Platform team stays up to date with the latest feature releases and additions to the Microsoft roadmap. See below for some of our blogs on recent Power BI release:

phData is one of Snowflake’s most trusted partners in North America – so much so that in 2022 phData was awarded Snowflake’s Americas Partner of the Year.

We are so incredibly grateful to be able to partner with an amazing company like Snowflake and help our common customers achieve their data goals. phData is committed to Snowflake and we firmly believe that Snowflake’s brightest days are still ahead.

During the past couple of years, phData has seen tremendous growth in its Power Platform Analytics business, which paved the way for us to be a Microsoft Gold Partner in 2022.

Our skilled team of Power Platform experts helps clients to implement, enable, enhance, and scale their current Power BI analytics infrastructure. If you are just starting out with Power BI or contemplating a Power BI deployment at your organization, make sure to read our Implementing Power BI Premium Capacity whitepaper.

With our deep commitment to Snowflake and our team of Power Platform experts, phData is uniquely positioned to help you and your organization take on the challenges of deploying Snowflake and Power BI to work in unison.

A fast-casual chain of restaurants (one of phData’s largest analytics clients) uses both Snowflake and Power BI to enable their enterprise reporting. These reports are sent from centralized BI to thousands of their restaurants on a daily basis informing them of metrics about their productivity, profit margin, and delivery effectiveness.

While the data models themselves are still housed within Power BI Service and maintained via Tabular Editor, all of the data pipelines and view creation still flow through Snowflake. The customer leverages the strengths of both platforms by allowing Power BI to support its enterprise reporting, while also letting Snowflake do all of the heavy lifting on the data model.

A major Consumer Packaged Goods (CPG) company, eager to dive deeper into Power BI Premium and enhance its reporting capabilities, faced a challenging task. The goal was not only to set up the necessary infrastructure to support comprehensive enterprise reporting, but also to seamlessly integrate Power BI with their existing Snowflake Data Cloud environment, enabling single sign-on (SSO) for dashboard users. Recognizing the complexity of this task, they turned to phData for a solution.

Understanding the challenges and objectives of the client, phData devised a tailored strategy. They closely collaborated with the client to deploy Power BI Desktop and Power BI Service across four crucial business groups dispersed over two continents.

As a result of phData’s solution, the client was not just equipped with a more potent Power BI Premium Capacity, but also gained the ability to view vital dashboards and construct their own ad hoc reports. Through this collaboration, the CPG company was able to take a significant step forward, enhancing its reporting and data capabilities across its multinational operations.

The future is bright for both Snowflake and Power BI. After a record-breaking IPO in 2020, Snowflake continues to see rapid growth and adoption in all markets and verticals while competitors like Redshift (AWS), Synapse (Azure), and Databricks are not seeing the same widespread adoption.

Microsoft has been leading the Gartner Magic Quadrant for BI tools for a few years running and there’s no reason to see that stopping any time soon. Microsoft continues to invest tons of money into the platform and releases new features every month.

By leveraging Power BI and Snowflake, our customers can accelerate their ability to generate insights, create powerful visualizations, and drive business decisions powered by Snowflake. And while there are nuances to using these two tools, Power BI is the data visualization platform of choice for many of our customers, and our potential customers who use Snowflake.

Looking to accelerate your data journey with Snowflake and Power BI? phData would love to help guide you to success. Reach out today for any questions, advice, and guidance.

Subscribe to our newsletter

Data Coach is our premium analytics training program with one-on-one coaching from renowned experts.