This blog was co-written by John Nowak and Troy Fokken

In our earlier post, we described the potential contained within HL7 data and highlighted some of the unique capabilities of the Snowflake Data Cloud that exist for working with this data in a revolutionary way.

In this blog, we’ll explore how phData uses Snowflake and Snowpark to transform longitudinal health records into actionable insights.

HL7 Solution Outline

Our HL7 processing solution contains three phases:

- Ingesting healthcare data into Snowflake in real-time

- Processing of raw HL7 messages in Snowpark into human-readable JSON data

- Flattening JSON objects into domain-specific data models for easy querying

Ingesting Data Into Snowflake in Real-Time

phData’s solution begins with the ingestion of raw HL7 messages into Snowflake. There are options to load data from a batch or post a stream of messages from a sending application in a real-time fashion. Snowflake offers Snowpipe as a turn-key tool to rapidly stage data, and be able to work with it inside the platform.

Processing of Raw HL7 Messages in Snowpark

Once the HL7 messages have been placed into a raw layer within the Snowflake database, we utilize the open-source HL7 Application Programming Interface (HAPI – pronounced “happy”)Java library inside of Snowpark.

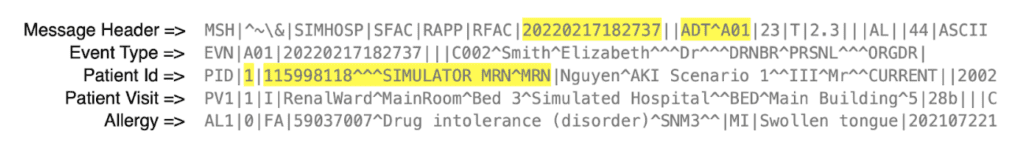

The magic that takes place at this stage is taking a semi-structured HL7 message from just line- and character-delimited fields, and parsing the data into an object which can be utilized for further processing and transformation. Excerpts of the processed message, showing the fields highlighted from the MSH and PID segments are shown below:

Attributes are referred to in their position within a segment. To work with the output in this form would still require a thorough understanding of the HL7 specification and the segments it defines. In order to make analytics engineering easier and approachable by a variety of engineers, we map all HL7 segments and fields from this more cryptic, positional format into human-readable descriptions instead:

The mapped output is stored in a separate layer in the Snowflake database as a VARIANT data type. This allows data engineers to use Snowflake’s unique semi-structured query syntax to flatten attributes into structured SQL tables.

Dynamic Data Model Creation

To go beyond working with this data in a user-friendly mapped JSON object, we built a dynamic field flattener. This field flattener will complete data profiling across an entire semi-structured dataset to recognize the object hierarchy and attributes within, taking the JSON output above and transforming it into a complete, workable data model of views like shown below:

These views are the source for any number of downstream analytics engineering activities.

Finally, dbt is used as the preferred tool to create reusable packages to model these messages and their segments into consumable datasets based on the business need.

Conclusion

We hope you enjoyed the closer look at our approach to taking and transforming HL7 messages from lines of text to valuable insights. The Snowflake Data Cloud along with phData’s solution is scalable to meet the demands of ingesting and processing hundreds to millions of HL7 messages each day into an essential piece of a healthcare data platform.

phData’s Data Engineering team has extensive experience designing, implementing, and supporting data products for healthcare customers. If you’re interested in a demo of phData’s HL7 solution on Snowflake, please contact us below!