A primer for scaling dbt while ensuring best practices

Scaling any system efficiently and effectively to the entire enterprise is always extremely difficult. This is true of any piece of technology. However, it is even more difficult when dealing with legacy data technology. Thanks to dbt, a new era of data transformation has been ushered in. Data teams are now more able to mimic the processes and patterns established by our software development counterparts.

However, even with everything dbt does, it is possible to run into scaling issues, which are often due to immature or misaligned processes. Avoiding these issues requires data teams to lean even more into the processes and patterns established in software development while understanding that continuing the paradigms that exist in legacy tools will propagate the scaling issues of our old systems.

In this whitepaper, we will lay out the common challenges organizations often encounter when trying to scale dbt to meet enterprise level demands. We will work through the various ways that organizations can address these challenges, including developing a Center of Excellence that will help drive adoption while maintaining the best practices that allow dbt to scale efficiently and effectively.

Common Challenges to Scale

Many of the challenges to scaling dbt often stem from a proof-of-concept (PoC) project that shows great potential. Over time, teams learn, grow, and improve but struggle to find time to address the issues and problematic patterns established by the PoC. As time moves forward, things continue to deteriorate until the benefits and capabilities of dbt, which are seen early on, appear to be overshadowed by process and tech debt.

As is the case with most tools, there are four primary issues at the core of this problem: a lack of standardization, a lack of ownership, inefficient workflows, and inadequate operational oversight. If these are not given intentional consideration and planning, these issues become increasingly detrimental to the adoption of dbt.

Lack of Standardization

A lack of standardization within the organization can cause massive sprawl within your data warehouse. Model names and project structures won’t be easy to understand, which will begin to hinder the new engineer’s ability to onboard and use dbt.

This leads to projects that are difficult to traverse and troubleshoot or, more importantly, difficult to maintain. This will result in wasted time spent addressing problems, leading to fatigue and burnout in your data team.

Lack of Ownership

Data teams may produce duplicative data assets that cause confusion on who owns that asset and who is responsible for improvements. Even worse, it’s possible that data teams produce assets that are ineffective, which can cause a cycle of avoidance when it comes to responsibility for the data set.

This lack of ownership will drive duplicative data assets that unnecessarily drive up computing and storage costs, as well as maintenance nightmares for your organization.

Inefficient Workflows

Inefficient workflows will result in a number of problems, from issues getting data approved and merged into the appropriate environment to problems with a cherry-picked merge that is now extremely difficult to reverse out of your environment.

Having inefficient workflows will waste the time of your data teams and drive frustration for both your data teams and your data consumers. Additionally, if there are transparency issues, this can begin to impact the ability of the organization to make decisions and hinder organizational agility.

Inadequate Operational Oversight

Missing operational oversight makes it extremely difficult to perform root cause analysis and address systemic issues that exist within the organization’s systems.

This lack of operational understanding can increase your cloud data warehouse costs and drive financial misuse of your system in a way that is difficult to understand and resolve. It is also difficult to find problems in the established process if there are no metrics to review and help identify where problems exist within the process.

How Does an Organization Avoid These Issues?

That is the purpose of this document. We will lay out how to address these problems within dbt in a way that makes achieving true enterprise scale and success attainable. Thanks to the paradigm shifts we are able to make with dbt, resolving these problems is significantly easier than in legacy tools and systems.

However, these steps are often overlooked or ignored, which results in an organization ending up back where they were with their old tooling. Stuck with inefficient processes, burnt out data teams, and unhappy data consumers, all while the organization continues to make decisions based on bad data.

Moreover, the scalability patterns implemented in dbt can and should be examined for all tools utilized across the data team ecosystem. Teams interact with a diverse range of tools beyond just dbt, implying that the processes and patterns established here should be evaluated for all tools employed within the organization.

dbt Conventions

Establishing standards and conventions within your organization serves several purposes. First, they offer clarity and facilitate communication regarding how teams and the organization as a whole should utilize dbt. Second, they enhance efficiency by streamlining processes and providing robust meta-testing capabilities, ultimately saving time during code reviews.

Furthermore, these conventions ensure consistency across projects and teams, simplifying contributions from individuals due to a uniform workflow, structure, and procedure. This consistency also fosters interoperability, enabling team members to troubleshoot and collaborate effectively across various dbt projects.

Finally, these conventions should be grounded in best practices to uphold quality assurance standards throughout the organization. It’s important to note that while these conventions should cater to the majority of the organization’s needs, there may be exceptions for edge cases.

To achieve this, organizations should begin by establishing conventions for project structure, model naming, model testing, and documentation. As a result, automating the enforcement of these conventions becomes feasible. Once these patterns are solidified and enforced, similar activities can be applied to template dbt Cloud Projects with Terraform.

Project Structure

The initial step involves setting up a project structure that aligns with the organization’s modeling paradigm. This serves as a guide for structuring and simplifies the process of establishing naming conventions for models. Furthermore, a well-designed project structure facilitates the efficient organization of layers within a dbt project within the data warehouse.

A prime illustration of this concept is:

Staging: Provides a location to stage our raw data, bringing in sources, renaming columns, and conforming data types.

Usually, little to no filtering is performed at this layer.

Intermediate: Provides a location to combine and clean up data, including filtering, joining, and complex calculations.

Marts: Provides the location to provide the final data model matching the paradigm used within the organization.

Presentation: (Optional) Provides a location to create potential secured views for the BI Presentation layer.

This results in a project structure that looks like:

├── models

│ ├── intermediate

│ │ └── finance

│ │ ├── int_payments_pivoted_to_orders.sql

│ │ └── int_payments_pivoted_to_orders.yml

│ ├── marts

│ │ ├── finance

│ │ │ ├── fct_orders.sql

│ │ │ ├── fct_orders.yml

│ │ │ ├── fct_payments.sql

│ │ │ └── fct_payments.yml

│ │ └── marketing

│ │ ├── dim_customers.sql

│ │ └── dim_customers.yml

│ ├── staging

│ │ ├── coffee_shop

│ │ │ ├── _coffee_shop__sources.yml

│ │ │ ├── stg_coffee_shop__customers.sql

│ │ │ ├── stg_coffee_shop__customers.yml

│ │ │ ├── stg_coffee_shop__orders.sql

│ │ │ └── stg_coffee_shop__orders.yml

│ │ └── stripe

│ │ ├── _stripe__sources.yml

│ │ ├── stg_stripe__payments.sql

│ │ └── stg_stripe__payments.yml

Data Vault

Certain organizations utilize structured modeling paradigms, such as Data Vault, which, while more complex, offer comprehensive frameworks. Implementing a paradigm like Data Vault may initially appear daunting, as it encompasses multiple stages, making it challenging to establish the project’s structure effectively.

Fortunately, this modeling approach features a tiered structure resembling:

Staging: Acts as the layer to stage our raw data source and generate the initial stages needed for the data vault.

Base Stage: Provides a location to stage our raw data, bringing in sources, renaming columns, and conforming data types.

Stage: This is where the base structures needed to feed into the Raw Vault, such as the hash keys and various other staged data.

Raw Vault: This is where the raw vault objects are stored

Hubs: Location to store the raw hubs

Links: Location to store the raw Links used to join hubs

Sats: Location to store the raw satellites for the hubs

Business Vault: Location to establish the business vault objects

Pit: Location to provide points in timetables

Bridge: Location to provide bridge tables

Marts: Provides the location to provide the final data model matching the paradigm used within the organization.

This results in a project structure like this:

├── models

│ ├── business_vault

│ │ ├── bridge

│ │ │ ├── bridge_geos.sql

│ │ │ ├── bridge_geos.yml

│ │ └── pit

│ │ ├── pit_customers.sql

│ │ └── pit_customers.yml

│ ├── marts

│ │ ├── fct_orders.sql

│ │ ├── fct_orders.yml

│ │ ├── fct_payments.sql

│ │ ├── fct_payments.yml

│ │ ├── dim_customers.sql

│ │ └── dim_customers.yml

│ ├── raw_vault

│ │ ├── hubs

│ │ │ ├── hub_customers.sql

│ │ │ ├── hub_customers.yml

│ │ │ ├── hub_orders.sql

│ │ │ ├── hub_orders.yml

│ │ │ ├── hub_payment.sql

│ │ │ └── hub_payment.yml

│ │ ├── links

│ │ │ ├── link_customers_orders.sql

│ │ │ ├── link_customers_orders.sql

│ │ │ ├── link_orders_payments.sql

│ │ │ └── link_orders_payments.yml

│ │ └── sats

│ │ ├── sat_customers.sql

│ │ ├── sat_customers.yml

│ │ ├── sat_orders.sql

│ │ ├── sat_orders.yml

│ │ ├── sat_payments.sql

│ │ └── sat_payments.yml

│ ├── staging

│ │ ├── base

│ │ │ ├── coffe_shop

│ │ │ │ ├── _coffee_shop__sources.yml

│ │ │ │ ├── base_stg_coffee_shop__customers.sql

│ │ │ │ ├── base_stg_coffee_shop__customers.yml

│ │ │ │ ├── base_stg_coffee_shop__orders.sql

│ │ │ │ └── base_stg_coffee_shop__orders.yml

│ │ │ └── stripe

│ │ │ ├── _stripe__sources.yml

│ │ │ ├── base_stg_stripe__payments.sql

│ │ │ └── base_stg_stripe__payments.yml

│ │ └── stage

│ │ ├── stg_customers.sql

│ │ ├── stg_customers.yml

│ │ ├── stg_orders.sql

│ │ ├── stg_orders.yml

│ │ ├── stg_payments.sql

│ │ └── stg_payments.yml

Data Mesh

Another critical consideration is whether the organization opts for a single dbt project or multiple projects. Both approaches offer distinct advantages and drawbacks. Consolidating the entire organization into one dbt project simplifies setup with minimal configuration required.

However, managing the git workflow and resolving conflicts can grow increasingly complex. Conversely, dividing projects into multiple repositories enables the organization to embrace the data mesh paradigm. This fosters streamlined workflows, empowering siloed teams to operate autonomously while facilitating transparency and the sharing of models developed by specialized experts.

The architecture of the data mesh should mirror the organization’s collaborative dynamics. For instance, if a centralized team provides staged and cleaned datasets to various siloed teams, their structure may differ as they distribute cleaned datasets to specialized teams. These specialized teams can then utilize the clean data for modeling, deriving insights to address diverse business queries, and sharing their findings across the organization.

Alternatively, some teams may remain entirely siloed, handling both data ingestion and modeling independently, with finalized data models shared with other teams as needed.

Project Configs

Another consideration is to examine how configs are applied throughout the organization’s dbt projects. The various configs are hierarchical, with the lowest granularity config winning. This means configs have a hierarchy based on config location and the specificity of the location.

Because of this, we recommend the following configuration process:

General configs for entire directories should be applied at the

dbt_project.ymllevelModel documentation, tests, and contracts should be stored within the property configs for a particular model

Overwriting the defaults applied at the

dbt_project.ymllevel should be added sparingly at the property level

Config block configs should be reserved for configs that add context to model development, such as unique keys for incremental models

Model Naming

With a structured project framework in place for the organization, establishing naming conventions that complement both the data warehouse and project structure becomes straightforward. Utilizing subdirectories aids in configuring how models are named and their locations (such as specific schemas or databases) and provides descriptive identifiers that reflect their purpose or function.

Hence, prefixes like base_stg_, stg_, int_, fct_, and dim_ serve as markers applied to model names. Taking this a step further, requiring stg_ models to incorporate the data source within their names enhances clarity both within the dbt project and the data warehouse environment, facilitating easier model identification and understanding.

Model Testing

Now that a project structure and model naming convention exist, the next step is to begin establishing and determining the appropriate testing process. This may leave you wondering why data testing is important.

Data engineering projects often operate on numerous assumptions, including the timeliness of data, the integrity of data models, and the accuracy of transformed data. Without testing these assumptions, we risk operating in ignorance or, worse, making the wrong business decisions.

Testing is essential to ensure the reliability and validity of our data assets. Without it, errors could proliferate unchecked, leading to potentially costly consequences. When data tests fail, it’s crucial to understand the underlying reasons. Failures may stem from various issues, including:

SQL discrepancies: The SQL code in your model may not perform as intended.

Incorrect assumptions about source data: Assumptions about the source data may be inaccurate or outdated.

Changes in source data: Changes in the source data may invalidate previous assumptions.

Analyzing test failures prompts reflection on potential changes in source data, model construction, or underlying data understanding.

Now that we’ve addressed the significance of data testing and the importance of performing root cause analysis on test failures, let’s outline a structured approach to building a testing-centric culture within your organization.

phData propose five phases of adoption:

Laying the Foundations: Basic tests around primary keys and foreign key relationships

Becoming Proactive: Implementing source freshness, statistical and regression tests to help monitor for data and model drift

Cultivating a Culture of Testing: This happens by enforcing and monitoring for tests to exist on all models.

Addressing Alert Fatigue: Tests can cause alert fatigue if the organization is not alerting on the appropriate errors. Create checks to see if tests are still needed over time

A great way to avoid alert fatigue is to have heavier testing on sources and final data models also, tagging regression models, and only having them run as a part of CI/CD

Achieving the “Goldilocks” Zone: When all data sets are appropriately tested and have the right tests to detect and prevent bad data from flowing through the pipeline. This often looks like heavy testing at the source and mart levels, with minor testing on the more intermediate layers.

Each phase represents a step towards establishing robust testing practices, particularly within the dbt framework. By understanding the characteristics of each phase, you can navigate towards optimizing your testing processes and achieving the desired Goldilocks Zone of testing effectiveness. But it all starts by establishing a Culture of Testing around what tests are expected on datasets.

Documentation

With an established project structure, standardized model naming conventions, and robust model testing procedures in place, the next critical aspect to consider is how your organization documents its models and datasets. This can be approached in several ways:

Single vs. Multiple Documentation Configuration: Decide whether each model should have its own YAML file containing documentation specific to that model or if there should be a single configuration file for an entire directory.

With a single documentation file for each model, it becomes extremely easy to make targeted changes as the documentation is housed in a single file targeted at a single model. However, this can cause file sprawl within dbt projects

With multiple documentations in a single file such as at the folder level, there won’t be any form of file sprawl. However, if that folder has a lot of models the single file may become difficult to manage and will increase the risk of merge conflicts.

Utilization of Markdown: Determine the organization’s strategy for leveraging Markdown to enrich model documentation, enhancing readability and clarity.

Required vs. Optional Documentation: Establish which documentation elements are mandatory, such as model documentation and documentation pertaining to keys versus other columns.

Once decisions regarding documentation practices for various models have been finalized, the subsequent step is to implement and enforce these standards and best practices consistently across the organization.

Standards Enforcement

With the organization’s standards in place, the subsequent phase involves strategizing the enforcement of these standards. This is where dbt proves invaluable, as it enables the organization to incorporate meta-testing, ensuring adherence to dbt best practices.

Implementing meta-testing simplifies the identification of deviations from established standards, empowering approvers and reviewers to pinpoint areas where best practices were not followed.

By testing for these best practices within transformation pipelines, dbt provides clear success or failure outcomes, streamlining the review process. This saves time and enables reviewers to allocate more attention to less routine and frequently overlooked standards.

Meta-Testing

There are two distinct methods for meta-testing: dbt_project_evaluator and dbt_meta_testing. The former utilizes the established dbt_project_evaluator, which aids in identifying common issues and potential performance bottlenecks across the dbt project.

The Project Evaluator extends its functionality by automating the testing of organizational standards and conventions, ensuring compliance through codified tests. On the other hand, dbt_meta_testing offers an advanced approach, enabling organizations to delve deeper into testing by examining various meta-artifacts and identifying areas where teams may deviate from best practices.

SQL Code Linting

Style guides play a crucial role in ensuring consistency and coherence in our code’s voice and structure. As the number of models increases, having a solid project structure alone is insufficient; a coding style guide becomes equally essential.

However, style guides often delve into fine details that can potentially hinder a project’s progress due to their subjective nature. Therefore, we recommend adopting one of the standard style guides and incorporating proper project linting to uphold code style.

Notable examples of such guides include dbt’s Style Guide, GitLab’s Style Guide, and Matt Mazur’s SQL Style Guide. Once a style guide is chosen, the subsequent step involves integrating linting into your workflow to enforce adherence to the selected guide using SqlFluff.

Shared Assets

The organization must address the question of how to distribute shared resources effectively. While data mesh patterns facilitate the establishment of project dependencies for sharing models among siloed teams, they do not inherently support the sharing of common macros and utilities.

These types of assets are ideally disseminated as packages installable across the organization, providing a centralized repository for shared resources. This approach ensures that all teams can access and utilize shared macros and utilities seamlessly.

Through data mesh and project dependencies, teams can monitor the usage of their assets across projects and anticipate potential disruptions caused by changes. Currently, the most effective method to manage shared macros is to employ tagging, ensuring that the repository is appropriately tagged to facilitate upgrades and testing by diverse teams. This allows for the identification and resolution of bugs or issues that may arise during the adoption of changes.

Unified Metrics

Once modeling conventions and development processes are in place, best practices enforced, and common assets shared effectively through project dependencies and packages, the next challenge to tackle may involve the numerous and diverse metrics scattered throughout an organization.

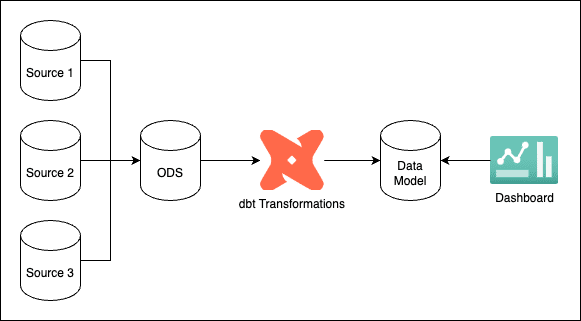

These disparate metrics and calculation processes can create friction among teams and executives as they strive to ascertain the accuracy of metrics. Fortunately, dbt offers a semantic layer that empowers cross-functional teams to define standardized metric calculation methods across the organization and centralize these calculations. This unified metrics layer facilitates swift and reliable business intelligence reporting, resulting in a federated transformation system that looks like:

Thanks to the Metrics Layer we established, there’s a centralized hub for all business tools to access and derive insights and value from the interconnected domains across the organization. All metrics are housed within a singular repository, leveraging project dependencies to connect to various domains. With this consolidation, the release and review of new metrics primarily become matters of governance and organizational processes, as all metrics are defined in YAML within a unified repository.

Terraform Templates

With all best practices now in place, the focus shifts to ensuring efficient deployment of new dbt Cloud projects while adhering to established standards. To achieve this, dbt Cloud offers a Terraform provider that can generate new projects or update current projects. Leveraging Terraform, the organization can establish new projects, configure baseline environments and connections, create templated jobs for CI/CD workflows, and implement role-based access controls for project management.

This streamlines the administration and creation of dbt Cloud instances, ensuring a seamless and efficient process. dbt even provides a tool that can be used to import starting setups for their dbtcloud-terraforming repository.

git Best Practices

Using dbt, we can apply Software Engineering best practices to our data pipelines. However, one challenge lies in scaling within git workflows and processes, which can be daunting for data teams, given the differences in RBAC and other controls across various git systems.

Here, we’ll outline overlooked git best practices. Implementing these features and processes will simplify and streamline team collaboration within git while also enhancing scalability through a template repository.

Branching Strategies

One often overlooked but crucial step is establishing a well-defined git branching strategy. A common and well-defined branching strategy can significantly enhance collaboration, streamline development processes, and ensure code quality. Different frameworks offer various approaches to managing aspects of your codebase, such as feature development, bug fixes, and release management.

When effectively implemented, you’ll experience:

Isolation of Features: New features can be developed and iterated independently, minimizing conflicts with the main production codebase.

Collaboration: The strategy should promote collaboration by providing a structured approach to contributions and reviews.

Release Management: The structured actions within branches should simplify the process of preparing and deploying new releases, ensuring stable and well-tested code pushes into production automatically.

Code Stability: Maintaining stability within the production-ready code while isolating experimental and in-progress work into their own development areas.

To best highlight these features and the various benefits, we will focus on talking through Feature Branch workflow and a more advanced branching strategy called Git Flow.

Feature Branch Workflow

In a feature branch-based workflow, the team adopts a strategy of minimal yet frequent branching. Each unique feature or bug is developed in isolation within its own branch, promoting isolation of work and facilitating easy testing of new functionality. However, if features have a long lifespan, merge conflicts may arise, which can be mitigated by regularly merging the main code back into the feature branch.

This workflow offers a straightforward approach, particularly within the context of dbt. The main branch contains the code deployed in production, while feature branches are developed within dedicated development environments. Upon merging into the main branch, CI/CD jobs automatically execute and test the feature branch’s code in a test environment for validity and quality assurance before integration. This streamlined workflow is well-suited for smaller teams of engineers.

Git Flow Workflow

Git Flow represents a sophisticated and robust workflow geared towards release engineering. It delineates a set of enduring branches—main, develop, and release—aimed at providing a structured framework for different development endeavors. In this workflow, hotfixes are branched directly from the main to facilitate swift deployment while development progresses through a comprehensive quality assurance cycle.

This approach facilitates isolated feature development while offering opportunities for holistic integration testing within the development branch. As features are consolidated and prepared for release, they transition to a release area for further testing with various business units. Upon approval, the code is tagged and merged into the main.

Although this workflow entails complexity and demands discipline in managing various branches, its longevity allows for extensive quality assessment of code and data models under development. Standard CI/CD processes can be executed to merge features into the development branch. Subsequently, integrated features undergo testing upon merging into the release branch, enabling user acceptance testing before final approval and deployment. This systematic approach ensures easy reversion of major breaking changes if needed.

Branch Protections

After determining a branching strategy, it’s essential to implement safeguards to prevent team members from inadvertently bypassing the established process. This is achieved by implementing branch protection policies that restrict Pull Requests or Merge Requests as the only means to merge code into protected branches.

Additionally, further restrictions can be applied to specify which branches can accept Pull Requests or Merge Requests. These measures serve to reinforce and safeguard your development process.

Pull Request Templates

On the topic of Pull Requests or Merge Requests, it is important to facilitate the information that needs to be provided to make the lives of the code approver easier. With templates, the organization can provide the expected information for the developer to fill out and provide to the approving team.

This makes it easier for the approvers to understand what work has been completed, what potential problems could exist, what testing and validation has occurred, etc. With a proper template, we make it easy and standardized so that our team members can contribute and document their changes effectively.

Pull Request Approval Process

Now that the team and organization have established a pull request template and branching process, it’s essential to address the approval process. Pull request approvals play a crucial role in the coding process, and thanks to our meta-testing and standards enforcement, identifying overlooked details is much more straightforward. It’s now time to define the approval process and set expectations for team leads and approvers.

The review process is typically iterative and follows these steps:

A team member submits a pull request, triggering a notification for the reviewer.

The reviewer can leave comments on the code, requesting changes if necessary.

The developer updates the pull request to address the comments.

Steps 2 and 3 may repeat until the code is deemed ready for approval.

Finally, the pull request is approved and ready to be merged.

The duration spent on steps 2 and 3 may vary based on the developer’s proficiency. It’s not uncommon for newer developers to spend more time in this area compared to their more experienced counterparts. The review process should be viewed as an opportunity for learning and potentially for teaching for the approver.

Code Ownership

As part of the approval process, it might be essential to safeguard specific sections of a project by mandating approvals from certain teams. For instance, updates to the dbt_project.yml could necessitate approval from a centralized platform team. Git platforms offer the option to create a code owner file, enabling the establishment of ownership and additional layers of approval within a pull request.

Typically, a code owner file might resemble the following:

##############################################################

#

# List of approvers/reviewers for Project

#

##############################################################

# These owners will be the default owners for everything in the repo.

* @team-leads

/models/metrics @analytics-team

.github/* @platforms-team

.sqlfluff @platforms-team

dbt_project.yml @platforms-team

packages.yml @platforms-team

Template Repository

Developing these diverse configurations, processes, and best practices may initially seem like a significant endeavor, prompting the question: How scalable is this?

Fortunately, across various git providers, we can capture all these patterns and processes we’ve established and encapsulate them as templates. These templates can then be utilized to swiftly create new projects, ensuring that all established patterns and best practices are automatically integrated. This allows you to invest effort in this work once and effortlessly replicate it for each new project, saving time on the repetitive implementation of these components.

Deployments & Pipelines

Now that we’ve covered dbt best practices and git let’s shift our focus to examining our pipeline and exploring the different deployment options available to us. With dbt, we can leverage a range of deployment processes commonly used by software engineers, which were previously inaccessible to data teams. In this section, we’ll clarify the various pipelines you can leverage and highlight optimizations that you might not be utilizing.

Regular Refresh

Regular refresh pipelines represent the most straightforward pipeline to leverage. These pipelines are deployed across the organization to periodically update data. Refreshing may align with warehouse downtimes to prevent concurrency issues or coincide with the availability of fresh data for processing. These jobs can be tightly coupled with data loading via an API call or scheduled using cron.

Continuous Integration/Continuous Deployment

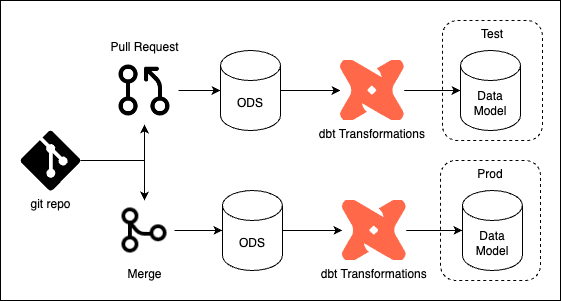

The subsequent level of pipeline that organizations should implement revolves around CI/CD. These pipelines are typically workflow-oriented and are linked to pull requests. This implies that when a pull request is initiated, a segment of the pipeline (either entirely or just the altered portion) will run in a non-production environment, notifying the approver of any potential integration issues based on the received success/failure message.

These pipelines may also include a deployment phase, where upon approval and merging of the code, the pipeline is triggered to deploy the latest code changes into the appropriate environment, determined by the branch it was merged into. These automated procedures enable organizations to merge code with confidence.

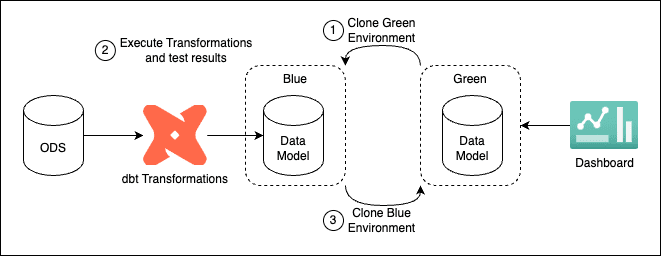

Blue-Green Deployments

Blue-Green deployments offer organizations a structured approach to deploying models. Initially, changes are implemented in a blue environment, where the data undergoes validation via automated tests. Once validated, the changes are merged into the green environment. This process is more intricate compared to other deployment methods, as it necessitates the existence of a mock non-production environment for code execution. However, it enables the business to continue operating on the old data version until the new version passes the expected data tests. This approach helps prevent downtimes and maintains trust in the organization’s data sets, as only validated data is published into the production environment.

This results in a pipeline that looks like this:

Clone Green Environment (Prod) into Blue Environment

Execute models and process fresh data into Blue Environment

Validate data using data tests

If all tests pass, Clone or Merge data in Blue Environment into Green Environment

Write-Audit-Publish Deployments

To extend the Blue-Green Deployment process further, Write-Audit-Publish (WAP) pipelines introduce human intervention into the loop. The concept behind a WAP pipeline is to initially write data into a non-production environment, conduct audits using data tests that require human approval, and upon receiving authorization, publish the data into the production environment. WAP pipelines are particularly suitable for critical data sets that warrant human involvement.

Typically, these pipelines are structured as follows:

Clone Production Environment into Non-Production Environment

Execute models and write fresh data into the Non-Production Environment

Validate data using data tests, and these results are then used by an individual to audit the results

If test results are expected and the data looks good, the publish step is approved, and data is either merged or cloned into production

As a pipeline evolves and the testing suite becomes more comprehensive, automated approval may become feasible. The decision to automate will hinge on the organization’s inclination toward full automation. When considering automation at different maturity levels, the following guidelines are recommended:

Low Maturity: These pipelines are relatively new with fewer tests. Regardless of whether they pass or fail, both a business user and a data engineer should audit the results.

Medium Maturity: These pipelines have progressed and now have a substantial test suite. Any failures should still be reviewed by both the business and a data engineer, but the engineer’s involvement may be reduced for successful executions.

High Maturity: At this stage, the pipeline boasts a comprehensive and effective test suite. Failures or warnings should be reviewed by the business, while a completely successful pipeline may be automatically deployed into production.

Pipeline Optimization

Having addressed several deployment options, it’s crucial to explore pipeline optimization within dbt. dbt offers a tool named SlimCI, which simplifies the identification and implementation of optimizations in your pipeline. SlimCI introduces stateful selection to pipeline executions, deviating from idempotency but delivering substantial cost savings when scaled.

Below, we’ll delve into a few existing optimizations:

State Selection

The state method in dbt is a highly potent selection tool. It enables you to choose sections of your pipeline depending on the type of alterations between two runs. These changes specifically relate to the code or its presence.

For instance:

state: new – Run any models that are new by looking for their unique ID in the previous run

state: modified – Run any models that are new or that have changed since the last run (there are a variety of sub-selectors that can be used with this as well)

state: old – Run any models that already existed in the previous execution

state: unmodified – Run any models that have not changed since the previous execution

These selectors allow for optimized selections within CI/CD pipelines to avoid execution and testing models that have no new enhancements.

Source Status Selection

The source status method in dbt enables organizations to skip running models that lack new or updated data. It achieves this by conducting source freshness tests on data sources, comparing them between the previous and current run. This approach not only alerts on stale sources but also bypasses any models without fresh data. This streamlines and optimizes our routine refresh process, preventing wasteful computation.

Result Selection

The resulting method in dbt facilitates execution based on previous pipeline outcomes. It allows for the identification of models that failed in previous runs, signaling them for re-execution. This capability enables smart reruns, avoiding the recomputation of models that have already succeeded.

Although it adds complexity, such as determining if a failure occurred, this can be easily managed with the dbt API’s rerun failed jobs endpoint. This endpoint first checks if there were any errors or failures in the previous job’s state. If so, it resumes execution from the point of failure. Otherwise, it executes the job afresh.

Other Orchestrators

A plethora of orchestrators is available for integration with dbt, serving to tightly couple transformations and deployment processes with other activities like data ingestion, dashboard refreshes, or model training. In contrast, dbt’s built-in orchestrator operates on the premise of a loosely coupled pipeline.

However, certain organizations may require tighter coupling of pipeline activities to enhance observability during executions. We will discuss how to augment the observability achievable within dbt by incorporating other artifacts produced by ingestion, orchestrators, and similar components.

Avoiding Job Sprawl

As the number of projects grows and diverse job requirements emerge, concerns may arise regarding the proliferation of single-use jobs. dbt offers a solution through model tagging, which can be applied at both the folder and model levels, with tags accumulating in an additive manner. This functionality enables the designation of refresh schedules using tags, simplifying the management of multiple models with a single job scheduled accordingly.

Observability & Operations

Simply implementing deployment processes and pipeline optimizations isn’t sufficient to create trusted and scalable transformations. As time progresses, data volumes may increase, leading to escalated computation costs, or assumptions about data velocity may change.

These issues aren’t always foreseeable upfront, underscoring the importance of establishing observability within dbt. Additionally, developing a FinOps process is crucial to ensure responsible spending and cost management.

Observability with dbt Artifacts

When a dbt job runs, it produces a diverse range of artifacts. These artifacts encompass various details about a specific dbt project and its invocation. They include information on project components, execution statistics, source freshness metrics, documentation, and more, all stored within extensive JSON files generated by dbt. These artifacts are instrumental in facilitating the optimization selections discussed earlier.

dbt Artifacts offer organizations visibility into dbt’s execution, timing, and methodology. This visibility is crucial as it enables teams to store this data in their data warehouse for monitoring and issue detection. Moreover, with the dbt Explorer showcasing some of this data, there are multiple avenues available for monitoring and troubleshooting when changes occur within the dbt pipeline, potentially leading to increased costs.

Augmenting Built-in Observability

Utilizing dbt enables the creation of a comprehensive data model that captures the executions conducted within the platform, leveraging the generated artifacts. However, this alone offers insight solely into the transformation pipeline. To attain a complete understanding of data operations throughout the organization, it’s crucial to produce analogous artifacts for pipeline executions, data ingestion tasks, dashboard refreshes, and other relevant activities.

Integrating these artifacts with those generated by dbt enhances observability across the entire system. Employing an orchestrator capable of storing results and statistics for these activities greatly facilitates the establishment of such observability.

Alerting & Operational Ownership

Once jobs are deployed and operational, the next step is to determine operational ownership. Operational ownership helps establish patterns and Service Level Agreements (SLAs) around operational support. This often starts by defining an alerting process, which can easily be accomplished by making use of dbt Webhooks to set up appropriate alerting based on errors. Establishing patterns that can be used to notify end users, as well as any on call engineers.

Alerting Best Practices

There are primarily two groups that need to be notified of issues within dbt: Producers and Consumers. Producers are the teams building data models, while Consumers are the analysts utilizing those models. Let’s start with Producers, who should have SLAs in place for issue resolution.

dbt offers built-in alerting functions to keep Producers informed of issues. The primary alerting options include email and Slack notifications. Email alerts allow team members to specify projects and jobs they want to be notified about. Slack alerts enable a single integration to notify a designated channel about job statuses. While useful, these alerts might not scale well for larger teams. For broader scalability and customized messaging beyond native integrations, dbt provides a webhook API. This API allows for sending alerts to various tools used by the organization, facilitating more effective and targeted communication.

With Producers receiving alerts through systems like PagerDuty, it’s essential to ensure transparency for Consumers. This can be achieved by building exposures. Exposures enable the Producer team to tag models and metrics used by specific dashboards or reports. These exposures can then be displayed as status tiles within the dashboard, providing data Consumers with real-time insights into any potential issues the team is addressing.

FinOps

As usage of the cloud data warehouse increases, causing expenses to rise, it becomes increasingly vital for teams to grasp the extent of their expenditure on data development and transformation. Particularly with the emergence of AI, many organizations aspire to leverage its disruptive potential within their sectors. However, AI initiatives can be prohibitively costly, making it challenging to justify implementation if data warehouse expenses are soaring.

Establishing a comprehensive FinOps process enables organizations to distinguish between healthy growth and uncontrolled, skyrocketing costs. In the referenced blog, there is an excellent example of how proper observability can significantly reduce wasteful consumption. A customer had migrated some legacy workloads to the cloud, which were driving up costs in their data warehouse. By implementing observability, the team identified numerous inefficiencies and ineffectively executed models within their dbt project. Additionally, they realized their warehouse was excessively large. With these insights, the team utilized SlimCI to optimize pipeline and model runs, drastically reducing job execution times. Furthermore, by optimizing and downsizing the warehouse, the organization was able to cut costs by 85%.

By implementing robust observability measures and monitoring pipelines for inefficiencies, teams can ensure responsible spending. This includes employing source status selection to avoid redundant model runs, utilizing stateful selection for CI/CD processes, implementing smart reruns to skip costly pipeline segments, identifying and addressing bottlenecks that contribute to idle time in the transformation pipeline, and evaluating the cost-effectiveness of materializations given the data volume. With a comprehensive FinOps process in place, teams can effectively curtail data expenses while maintaining responsible spending practices.

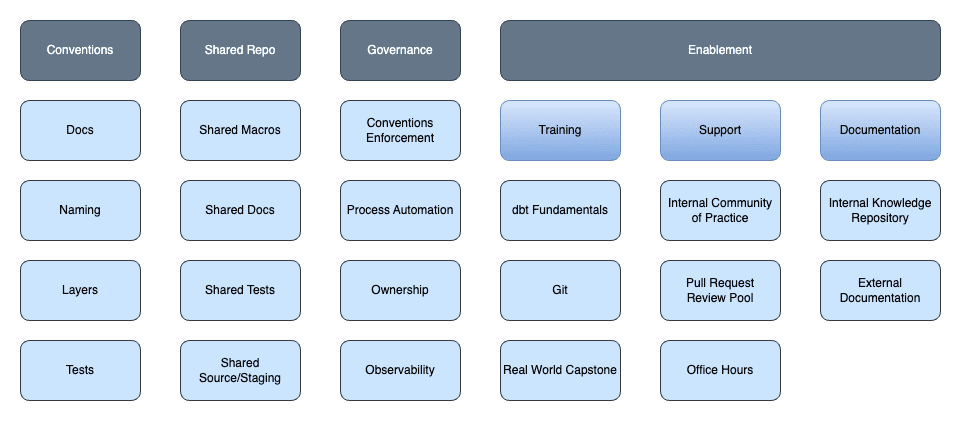

Creating a Center of Excellence

Now that operational and organizational best practices are in place, the final step is to establish a Center of Excellence (CoE). This CoE serves as a mechanism to foster understanding and adoption of the established best practices and paradigms within the organization.

While it’s simple to compile everything into a document and expect everyone to grasp it, some may find the language and concepts unfamiliar, leading to confusion. Establishing a robust CoE is essential for driving effective enablement throughout the organization, beginning with a core group of internal advocates.

Identifying Internal Advocates

In the early stages of dbt adoption, certain individuals will emerge as thought leaders and advocates. These individuals possess deep expertise not only in dbt but also in the established processes and paradigms, often because they were instrumental in shaping them. These internal advocates serve as experts who can lead the Center of Excellence (CoE), starting with enabling the organization.

As time progresses, these advocates not only facilitate the organization’s adoption of dbt and drive efficiency but also pinpoint areas where the established processes fall short. This feedback loop enables continuous improvement of the overall process across the organization. Advocates can identify specific teams or edge cases that require different levels of support or simplification while also providing the necessary empathy to ensure those teams feel understood.

Establishing Enablement

Enablement takes shape through various means. In the initial phases, internal advocates might offer open office hours to assist others in their dbt journey and ensure adherence to organizational best practices.

However, this approach can become burdensome, signaling the need for a more structured enablement approach across the organization. This may involve a range of enablement activities, including training sessions, communities of practice, and comprehensive documentation.

Training

Developing training programs can be challenging, but fortunately, dbt offers a wealth of training resources to support teams in getting started. However, during office hours, advocates should pay attention to recurring issues and questions that may indicate gaps in the existing training materials. This signals the need for organization-specific training to address these gaps. Team members can take the initiative to create training sessions tailored to the organization’s needs, helping to bridge the knowledge gap for employees. This contributes to building a robust onboarding process that ensures employees are well-equipped with the necessary skills and knowledge. While also reducing the burden of the office hours by replacing them with these skill-based training sessions.

In an ideal scenario, this training session serves as a practical capstone, offering team members hands-on experience with the processes and tools they use daily. This approach fosters those “ah-ha” moments as participants grasp how these tools and processes integrate seamlessly into their workflows. Additionally, it provides insights into existing automation and streamlining efforts aimed at simplifying their work processes.

Process Improvements

As teams adopt dbt and receive appropriate coaching, it is possible that overlooked patterns and processes will be discovered. This provides an opportunity to lean into these old processes and determine if what we’ve built effectively supports these processes or if we need to push back and focus on further training with teams.

For example, if the organization has chosen to pursue a Git Flow-based workflow but a particularly small team is constantly cherry-picking changes in the release cycle, That can be a sign to analyze why the team is doing this and identify if there is a better solution.

Maybe the team needs a simpler workflow separate from the rest of the organization, or maybe this is a sign of extended training this team needs. These issues should facilitate conversations to either improve the current processes or improve training for future cohorts.

Communities of Practice

Communities of Practice (CoP) offer a platform for like-minded individuals to engage in discussions on diverse topics. A well-structured CoP fosters an environment where team members can collaborate to solve complex challenges in creative ways.

Additionally, it serves as a forum for exchanging ideas and staying updated on the latest trends in technical tools. This sense of community nurtures a feeling of belonging within the organization. Moreover, CoPs play a role in identifying emerging advocates who can further promote the adoption of best practices and tools.

Additionally, Communities of Practice can serve as a valuable resource for identifying potential approval pools, guiding new teams in understanding best practices and acting as mentors during their dbt adoption journey. This collaborative approach is crucial for scaling teams’ skills across the organization effectively.

Discussion Forums and Chats

Within an organization, the emergence of forums or chat platforms often marks the initial formation of a Community of Practice (CoP). These platforms serve as repositories for documenting queries and solutions related to the organization’s tools and practices. Similarly, in the case of dbt, establishing a forum or centralized chat enables teams to exchange insights on patterns and current topics, all within a searchable platform.

As adoption increases, these forums and chats can become chaotic and disorganized. To address this, it’s crucial to invest time in defining support roles and setting expectations. Early establishment of key groups, such as admins, responsible for monitoring overall health, moderators who engage with users and address queries, and ambassadors who represent and advocate for the forums, helps maintain order and productive discussions within these platforms.

Internal User groups

The next phase involves establishing a user group aimed at fostering knowledge sharing within the organization. These user groups serve as platforms for members to exchange insights, discuss upcoming process changes, explore skill development opportunities, highlight achievements, and offer feedback. Typically held on a regular basis, such as monthly or quarterly, these sessions are led by internal advocates and follow a structured format:

Welcome and Announcements: Kick off the meeting with a welcome message and updates on recent changes and announcements.

Skill Development: Dedicate time to enhancing dbt skills within the organization, covering advanced features, or introducing new ones.

Success Stories: Share notable success stories from within the organization, showcasing accomplishments such as the development of high-value datasets or cost savings from pipeline optimizations.

Content Recognition: Recognize team members who have made significant contributions to the forum or have become prominent voices in the broader dbt community.

Open Feedback: Provide an opportunity for attendees to share feedback, ask questions, and engage in open discussion.

Each session should be recorded and shared with the organization to ensure that all members, including those unable to attend in person, can benefit from the insights shared during the user group meetings.

Internal Blogs or Newsletters

As user groups and internal chat channels expand, there might be a desire for an internal blog or newsletter. This creates a space to discuss the latest trends and challenges being addressed by teams. Moreover, it offers a platform for individuals to showcase and share their accomplishments within the organization, acknowledging the efforts of different teams. Organizations can share success stories, challenges, and valuable tips to illustrate the diverse applications of dbt throughout the organization.

Documentation

Setting up a documentation repository holds significant importance. While creating documents is straightforward, it’s essential to ensure they are regularly updated to reflect the latest insights and information. This upkeep requires dedicated attention, particularly in making the documentation easily searchable and comprehensible.

Conclusion

With established patterns, automated workflows, optimized deployments, and robust support from a Center of Excellence, the organization can gracefully scale with dbt, sidestepping common growing pains. These insights derive from repeated lessons and recurring questions. By laying this groundwork upfront, achieving scalability and efficiency will feel akin to a leisurely stroll through a park rather than a perpetual uphill struggle.