AI is evolving faster than anyone could have predicted. Its potential to streamline operations, enhance productivity, and solve complex problems continues to capture the interest (and budgets) of nearly every business.

As organizations navigate challenges like managing massive data streams and ensuring consistent customer experiences, the demand for scalable, effective solutions has never been greater.

In this landscape, integrating tools like ChatGPT isn’t just beneficial; it’s quickly becoming essential for businesses looking to maintain their edge or become industry leaders.

But what does its deployment at scale look like in real life? And what are the practical benefits and tradeoffs? This blog focuses on two hands-on examples:

Data processing and insights generation

Data pipeline integration

Read on to unpack ChatGPT’s potential benefits and limitations, clarify key implementation hurdles, and view a real-world example of how this technology can accelerate your workforce.

Our goal is to guide your business in leveraging ChatGPT more effectively to cut costs, save time, mitigate risks, and maximize business value.

To make things even more tangible, we’ll finish with a practical use case with functional code.

Understanding ChatGPT as a Strategic Asset

Before adopting ChatGPT at scale, it’s important to know what it is, how it works, and the benefits it brings to your business. ChatGPT processes natural language and generates human-like responses, making it a flexible tool for enterprises. Its core strengths include:

Pattern recognition across vast datasets

Summarizing and transforming unstructured text

Automating or even replacing manual, repetitive tasks

Seamlessly integrating into custom data pipelines

What Are the Benefits of ChatGPT for Enterprises?

Data Processing and Insights Generation

ChatGPT can be invaluable in transforming raw data into actionable insights. For enterprises dealing with large amounts of unstructured data, ChatGPT can:

Extract critical insights in seconds instead of hours

Summarize reports, reviews, or news articles

Highlight trends and anomalies—helping you spot risks or opportunities immediately

Example:

ChatGPT can classify customer review sentiment nearly instantly. Compare a time-consuming manual process with the following Python snippet:

```python
from openai import OpenAI

# Set up the OpenAI client (replace with your API key)
client = OpenAI(api_key="YOUR_API_KEY")


# Function to call the OpenAI API for sentiment analysis
def get_sentiment(review):
    response = client.chat.completions.create(
        model="gpt-4.1-nano",
        messages=[
            {"role": "user", "content": f'Classify the sentiment of this review: "{review}"'}
        ],
        max_tokens=10,
    )
    sentiment = response.choices[0].message.content.strip()
    return sentiment


# Example usage
review = "I absolutely love this product!"
print("Sentiment:", get_sentiment(review))
```

While basic, this highlights just how quickly ChatGPT can turn raw text into actionable insight.
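In practice, the model's reply is free text: it may come back as "Positive", "positive.", or a full sentence. A small post-processing step keeps labels consistent before they reach a report. The sketch below is one way to do this, assuming a fixed label set of positive, negative, and neutral; `normalize_sentiment` and `ALLOWED_LABELS` are hypothetical names, not part of the OpenAI SDK.

```python
import re

# Hypothetical label set; adjust it to whatever labels your prompt asks for
ALLOWED_LABELS = ("positive", "negative", "neutral")


def normalize_sentiment(raw: str, default: str = "unknown") -> str:
    """Map a free-text model reply onto one of ALLOWED_LABELS."""
    # Lowercase and split into words, dropping punctuation such as "Positive."
    words = re.findall(r"[a-z]+", raw.lower())
    # Return the first allowed label that appears as a whole word
    for word in words:
        if word in ALLOWED_LABELS:
            return word
    return default


print(normalize_sentiment("Positive."))                  # prints: positive
print(normalize_sentiment("The sentiment is NEGATIVE"))  # prints: negative
print(normalize_sentiment("Hard to say"))                # prints: unknown
```

This simple matcher does not understand negation (a reply like "not positive" maps to positive), so it works best alongside a prompt that asks for a single-word answer.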

Integrating into Your Data Pipeline

An LLM's speed and capabilities let you process datasets that would otherwise be impossible to handle within a given time frame. Before these tools, much of this work had to be done by a person or a team; the results took time, were always subject to some bias, and typically contained at least a few errors and inconsistencies.

With ChatGPT, much of that manual effort goes away.

Suppose your enterprise wants real-time awareness of its public image across dozens of media outlets. Traditionally, this would require multiple custom-built scrapers and heavy manual review. Today, ChatGPT can help:

Scrape and aggregate articles from several news sources

Extract consistent summaries, sentiment, and metadata

Feed insights into a reporting or analytics layer—ready for business action

Example:

Below is a trimmed-down walkthrough of this process, focusing on Apple Inc. coverage:

Apple is well known globally and is covered by most media outlets. We will define a series of sources and a simple scraping mechanism that feeds data into our pipeline so we can work on it.

We will compare a more conservative or traditional approach to something more modern and lightweight that leverages the power of ChatGPT.

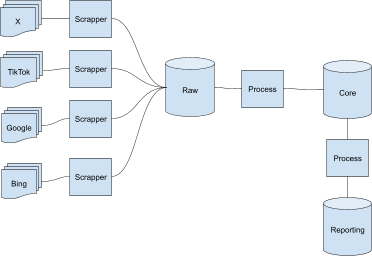

Imagine we write a scraper for each source based on its website structure, then store and process the data across several layers until we get a digested outcome that is meaningful for the business:

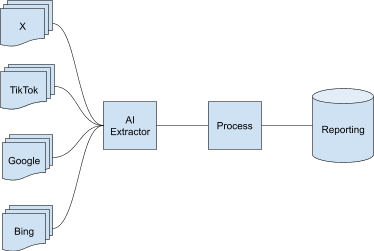

Compare that approach with this one, which uses an AI extractor to extract and transform the data, adds a second transformation step to derive insights, and finally loads the results into a reporting store. In our hands-on example, everything runs locally, so we will not use a real database or cloud compute for storage or processing.
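The AI-based flow described above can be sketched as three small functions: extract (ask the model for structured JSON), transform (derive insights), and load (write to a reporting store, here a local JSON file since everything runs locally). This is a minimal sketch under stated assumptions: the model call is passed in as a plain callable (`llm`) so it can be swapped for the OpenAI client or stubbed in tests, and the function names, the stub, and the output file name are all hypothetical.

```python
import json
from collections import Counter
from pathlib import Path
from typing import Callable


def extract(prompt: str, llm: Callable[[str], str]) -> list[dict]:
    """Extract: ask the model for articles and parse its JSON reply."""
    data = json.loads(llm(prompt))
    # Accept either a bare array or an object with an "articles" key
    return data["articles"] if isinstance(data, dict) else data


def transform(articles: list[dict]) -> dict:
    """Transform: derive simple insights (sentiment counts) from the articles."""
    counts = Counter(a.get("sentiment", "unknown") for a in articles)
    return {"total": len(articles), "sentiment_counts": dict(counts)}


def load(insights: dict, path: Path) -> None:
    """Load: write the insights to a local 'reporting store' (a JSON file)."""
    path.write_text(json.dumps(insights, indent=2), encoding="utf-8")


def run_pipeline(prompt: str, llm: Callable[[str], str], out: Path) -> dict:
    insights = transform(extract(prompt, llm))
    load(insights, out)
    return insights


def fake_llm(prompt: str) -> str:
    """Hypothetical stub standing in for a real model call; returns canned JSON."""
    return '{"articles": [{"sentiment": "positive"}, {"sentiment": "neutral"}]}'


print(run_pipeline("List recent articles about Apple Inc. as JSON.", fake_llm, Path("insights.json")))
# prints: {'total': 2, 'sentiment_counts': {'positive': 1, 'neutral': 1}}
```

Keeping the model call behind a callable means each stage can be tested without network access, and replacing the local file with a real database only touches `load`.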

Sources for Our Example

We will look at several news outlets to gather news and insights. The diagram above defines four or five different sources, but we will cover only one, in a generic fashion. The same approach can be replicated for the others by writing a prompt specific to each source.
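One way to replicate the generic approach per source is to keep a single prompt template and fill in the outlet name. A minimal sketch, assuming the outlet names below (hypothetical placeholders, not the sources from the diagram) and a shortened template loosely based on the improved prompt shown later in this post; `build_prompts` is likewise a hypothetical helper.

```python
# Hypothetical outlet names; replace them with the sources in your diagram
SOURCES = ["Outlet A", "Outlet B", "Outlet C"]

# Shortened, illustrative template; the full prompt would list every field and rule
PROMPT_TEMPLATE = (
    "Retrieve the top {n} recent news articles about {company} published by {source}. "
    "Return a JSON array of objects with the fields title, source, date, url, "
    "sentiment, and summary."
)


def build_prompts(company: str, sources: list[str], n: int = 10) -> dict[str, str]:
    """Return one prompt per source, keyed by source name."""
    return {s: PROMPT_TEMPLATE.format(n=n, company=company, source=s) for s in sources}


prompts = build_prompts("Apple Inc.", SOURCES)
print(prompts["Outlet A"])
```

Each prompt can then be sent through the same API call, and the per-source results merged downstream.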

The code is written in Python and uses OpenAI's gpt-4.1-nano model, which you can swap for another model if needed.

Here is the prompt I wrote first, followed by the version ChatGPT helped me improve for our use case:

Initial prompt:

Get the top 10 news or articles that talk about Apple. They can include new products, reviews, or general outlets' opinions on the company. Format the response as JSON, and include a 150-word summary of the article with a particular focus on the general sentiment.

Improved prompt (with ChatGPT’s help):

```text
Retrieve the top 10 recent news articles or reports about Apple Inc., including mentions of new products, reviews, or general opinions about the company. For each article, provide a JSON object with the following fields:
- title: the headline of the article
- source: the publication or outlet
- date: publication date
- url: link to the article
- sentiment: overall sentiment towards Apple (e.g., positive, negative, neutral, interested)
- summary: a concise 300-word summary of the article emphasizing the main points and the overall tone or sentiment expressed.
Ensure the response is formatted as a JSON array containing these objects for all ten articles. Ensure that the JSON is valid and well-structured. Include a timestamp in the response to indicate when the data was retrieved. The JSON should look like this:

[
  {
    "title": "",
    "source": "",
    "date": "",
    "url": "",
    "sentiment": "",
    "summary": ""
  },
  ...
]

Make sure to include a timestamp in the response indicating when the data was retrieved.
```

Notice how much better structured this is. I didn't spend a moment defining the JSON structure or worrying about formatting; it came out ready, and I used it directly. It worked like a charm. This falls under prompt engineering, a topic out of scope here but covered in depth in our Gen AI 101 Engineering 101 series.

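This is the code that pulls the news; it's really just a call to OpenAI's API. Keep in mind that a plain Chat Completions call does not browse the web: the model answers from its training data, so the articles it returns, including titles, dates, and URLs, should be verified before use.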

```python
import json
import os
import sys
from datetime import datetime

import openai

my_api_key = ""  # 🔐 Replace with your actual API key

client = openai.OpenAI(api_key=my_api_key)

company = "Apple Inc."

prompt = "Retrieve the top 10 recent news articles..."  # shortened for readability

response = client.chat.completions.create(
    model="gpt-4.1-nano",  # or "gpt-3.5-turbo" if you prefer
    messages=[
        {"role": "system", "content": "You are a helpful assistant that returns JSON. Include a timestamp in the response. And include the company name this is for."},
        {"role": "user", "content": prompt},
    ],
    temperature=0.7,
)

response_json = response.choices[0].message.content

# Parse the model output; stop if it is not valid JSON
try:
    data = json.loads(response_json)
except json.JSONDecodeError:
    print("Failed to parse response as JSON:")
    print(response_json)
    sys.exit(1)

# Save to a local file (create the output folder first if it does not exist)
os.makedirs("apple_news", exist_ok=True)
filename = f"apple_news/chatgpt_output_{datetime.now().strftime('%Y%m%d_%H%M%S')}.json"

with open(filename, "w", encoding="utf-8") as f:
    json.dump(data, f, indent=4)

print(f"JSON saved to {filename}")
```
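Even with a system message asking for JSON, models sometimes wrap their reply in a Markdown code fence, which makes `json.loads` fail on otherwise valid content. A small helper can strip such a fence before parsing. This is a sketch: `parse_model_json` is a hypothetical name (not part of the OpenAI SDK), and it assumes the fence, if present, surrounds the whole reply.

```python
import json

# Three backticks, built this way so the snippet is easy to embed in Markdown
FENCE = "`" * 3


def parse_model_json(text: str):
    """Parse JSON from a model reply, tolerating an optional Markdown code fence."""
    cleaned = text.strip()
    if cleaned.startswith(FENCE):
        # Drop the opening fence line (which may carry a language tag such as "json")
        cleaned = cleaned.split("\n", 1)[1] if "\n" in cleaned else ""
        # Drop the closing fence, if present
        if cleaned.rstrip().endswith(FENCE):
            cleaned = cleaned.rstrip()[: -len(FENCE)]
    # Raises json.JSONDecodeError if the remaining text is still not valid JSON
    return json.loads(cleaned)


reply = FENCE + 'json\n{"company": "Apple Inc.", "articles": []}\n' + FENCE
print(parse_model_json(reply))     # prints: {'company': 'Apple Inc.', 'articles': []}
print(parse_model_json("[1, 2]"))  # prints: [1, 2]
```

In the script above, `json.loads(response_json)` could be swapped for `parse_model_json(response_json)` while keeping the same `except json.JSONDecodeError` branch. On models that support it, the Chat Completions `response_format={"type": "json_object"}` option (JSON mode) is another way to reduce parsing failures; check the OpenAI API reference for your model.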

This is how the output JSON looks:

```json
{
  "company": "Apple Inc.",
  "retrieved_at": "2024-04-27T12:00:00Z",
  "articles": [
    {
      "title": "Apple's New iPhone Launch Sparks Excitement in China",
      "source": "TechDaily China",
      "date": "2024-04-26",
      "url": "https://techdailychina.com/apple-iphone-14-review",
      "sentiment": "positive",
      "summary": "The recent launch of Apple's iPhone 14 has generated significant buzz across China, with many praising its innovative features and improved performance. Reviewers highlight the enhanced camera system, faster processing speeds, and new design elements that appeal to Chinese consumers. Despite higher pricing, early sales figures indicate strong demand, especially among young professionals and tech enthusiasts. The article discusses Apple's strategic marketing efforts in China and how the new device is positioning itself amidst rising local competitors. Overall, the tone is optimistic, emphasizing Apple's ability to maintain its premium brand image and capture market share."
    },
    {
      "title": "Mixed Reactions to Apple's Latest MacBook in Chinese Market",
      "source": "Digital Trends China",
      "date": "2024-04-25",
      "url": "https://digitaltrendschina.com/apple-macbook-2024",
      "sentiment": "neutral",
      "summary": "Reviews of Apple's latest MacBook models in China present a mixed picture. Tech experts commend the device's sleek design and improved battery life but raise concerns about its high price point and limited local customization options. Consumers appreciate the performance boost, yet some express reservations about the pricing compared to local alternatives. The article notes that while Apple continues to hold a premium position, its market share growth in China appears to be slowing due to increased competition from domestic brands. The overall sentiment remains neutral as opinions vary based on user priorities."
    },
    {
      "title": "Apple Faces Scrutiny Over Supply Chain Practices in China",
      "source": "Economy Watch China",
      "date": "2024-04-24",
      "url": "https://economywatchchina.com/apple-supply-chain",
      ...
      "summary": "Apple's collaborations with prominent Chinese tech firms, including local chipmakers and software developers, are fostering innovation and enabling tailored product features for Chinese consumers. The article emphasizes how these partnerships help Apple adapt to local market needs and regulatory environments, enhancing product compatibility and performance. Industry leaders see this as a strategic move to strengthen Apple's position in China amid fierce competition. The overall tone is positive, underscoring the benefits of localization and strategic alliances in maintaining Apple's competitive edge."
    }
  ]
}
```
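Because the model can omit fields or invent new sentiment labels, it is worth checking each article against the fields requested in the prompt before analysis. A minimal sketch, assuming the wrapper layout shown above (a `company`, a `retrieved_at`, and an `articles` list) and treating positive, negative, neutral, and interested as the allowed labels, since those are the examples the improved prompt lists; `validate_articles` is a hypothetical helper.

```python
REQUIRED_FIELDS = ("title", "source", "date", "url", "sentiment", "summary")
# Assumed label set, taken from the examples listed in the improved prompt
ALLOWED_SENTIMENTS = {"positive", "negative", "neutral", "interested"}


def validate_articles(data: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means the data looks valid."""
    articles = data.get("articles")
    if not isinstance(articles, list):
        return ["'articles' is missing or is not a list"]
    problems = []
    for i, article in enumerate(articles):
        # A field counts as missing if it is absent or empty
        missing = [f for f in REQUIRED_FIELDS if not article.get(f)]
        if missing:
            problems.append(f"article {i}: missing {', '.join(missing)}")
        sentiment = str(article.get("sentiment", "")).lower()
        if sentiment and sentiment not in ALLOWED_SENTIMENTS:
            problems.append(f"article {i}: unexpected sentiment '{sentiment}'")
    return problems


sample = {"articles": [{"title": "t", "source": "s", "date": "2024-04-26",
                        "url": "u", "sentiment": "ecstatic", "summary": "x"}]}
print(validate_articles(sample))  # prints: ["article 0: unexpected sentiment 'ecstatic'"]
```

Files that return a non-empty list can be logged and skipped, or sent back to the model with a request to fix the listed problems.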

Example code for deriving insights from the saved files:

```python
import json
import os
import sys
from collections import Counter

import pandas as pd

# Path to the folder containing JSON files
apple_folder = "apple_news"

all_data = []

if os.path.exists(apple_folder):
    json_files = [f for f in os.listdir(apple_folder) if f.endswith(".json")]
    for file in json_files:
        file_path = os.path.join(apple_folder, file)
        try:
            with open(file_path, "r", encoding="utf-8") as f:
                data = json.load(f)
            company = data.get("company", "Unknown")
            retrieved_at = data.get("retrieved_at", None)
            # Tag each article with the company and retrieval time of its file
            for article in data.get("articles", []):
                article["company"] = company
                article["retrieved_at"] = retrieved_at
                all_data.append(article)
        except Exception as e:
            print(f"Error reading {file}: {e}")
else:
    print(f"Folder '{apple_folder}' does not exist!")

# Stop early if there is nothing to analyze (avoids KeyErrors and division by zero)
if not all_data:
    print("No articles found.")
    sys.exit(0)

df = pd.DataFrame(all_data)
# Unparseable dates become NaT instead of raising an error
df["parsed_date"] = pd.to_datetime(df["date"], errors="coerce")

# 1. Sentiment Count
sentiment_counts = Counter(df["sentiment"])

# 2. Most Recent Article Per Sentiment
latest_articles_by_sentiment = {}
for sentiment, group in df.groupby("sentiment"):
    latest = group.sort_values("parsed_date", ascending=False).iloc[0]
    latest_articles_by_sentiment[sentiment] = latest.to_dict()

# 3. Top Sources
source_counts = Counter(df["source"])

# 4. Sentiment Over Time (basic trend detection)
timeline_df = df.sort_values("parsed_date")
timeline = list(zip(timeline_df["date"], timeline_df["sentiment"]))


# 5. Summary Insight Generator
def generate_insight():
    total = len(df)
    companies = df["company"].unique()
    company_text = ", ".join(companies) if len(companies) <= 3 else f"{companies[0]} and {len(companies) - 1} others"
    pos = sentiment_counts.get("positive", 0)
    neu = sentiment_counts.get("neutral", 0)
    neg = sentiment_counts.get("negative", 0)
    mixed = sentiment_counts.get("interested", 0)

    insight = f"🔍 Sentiment Report for {company_text} ({total} articles)\n"
    insight += f"- Positive: {pos} ({(pos / total) * 100:.1f}%)\n"
    insight += f"- Neutral: {neu} ({(neu / total) * 100:.1f}%)\n"
    insight += f"- Negative: {neg} ({(neg / total) * 100:.1f}%)\n" if neg > 0 else ""
    insight += f"- Interested (mixed): {mixed} ({(mixed / total) * 100:.1f}%)\n" if mixed > 0 else ""

    insight += "\n📰 Top Sources:\n"
    for source, count in source_counts.most_common(3):
        insight += f"- {source}: {count} articles\n"

    insight += "\n📅 Recent Coverage by Sentiment:\n"
    for sentiment, article in latest_articles_by_sentiment.items():
        insight += f"- {sentiment.capitalize()}: \"{article['title']}\" ({article['date']})\n"

    # Optional: detect a tone shift between the oldest and newest article
    if len(timeline) >= 2:
        first_sentiment = timeline[0][1]
        last_sentiment = timeline[-1][1]
        if first_sentiment != last_sentiment:
            insight += f"\n⚠️ Tone shift detected from '{first_sentiment}' to '{last_sentiment}' over time.\n"

    return insight


# Output result
report = generate_insight()
print(report)
```
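The tone-shift check above compares only the oldest and newest article. For a slightly fuller view of sentiment over time, a per-day count table can help. A minimal sketch using `pandas.crosstab`, assuming a DataFrame with the same `date` and `sentiment` columns as above; the sample rows are made up purely for illustration.

```python
import pandas as pd

# Illustrative rows only; in the pipeline these come from the saved JSON files
sample_df = pd.DataFrame({
    "date": ["2024-04-24", "2024-04-25", "2024-04-25", "2024-04-26"],
    "sentiment": ["negative", "neutral", "positive", "positive"],
})

# Parse dates and drop rows whose date could not be parsed
sample_df["parsed_date"] = pd.to_datetime(sample_df["date"], errors="coerce")
sample_df = sample_df.dropna(subset=["parsed_date"])

# Rows: calendar day, columns: sentiment label, values: number of articles
daily = pd.crosstab(sample_df["parsed_date"].dt.date, sample_df["sentiment"])
print(daily)
```

With more files collected over time, the same table can be plotted or compared week over week to spot sustained changes rather than a single flip.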


How Do These Examples Help Companies Scale?

This was my first attempt at using the OpenAI API, yet by leveraging ChatGPT and other AI tools for coding, I was able to build this whole example in 45 minutes.

At scale, time is one of the most valuable assets, and it can be translated into other assets.

This is a simple example of a web scraping tool that would have taken much longer to set up without AI tools, since we would have had to implement every step of the process ourselves.

By writing the right prompt (which I also polished with AI's help until it produced the right result), you have your Extract and Transform steps covered. Loading the data into a warehouse is out of scope for this post, but it is straightforward with the tools available. Because the response is JSON, we could plug in any document database for this example, and if we want to land the information somewhere else, it is as easy as asking for a different output.

Imagine you now need to put your data into a SQL database: just ask! Rewrite the prompt to specify the database engine, format, and column types, and you will get an insert script as output that, once reviewed, you can send to your database. The ease this brings to any pipeline is tremendous: when you switch technologies, you no longer have to rewrite your database-layer logic by hand. ChatGPT does this for us, and it is only getting better.
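For comparison, if you prefer not to send model-generated SQL straight to a database, the JSON files saved earlier can also be loaded deterministically with parameterized inserts. A minimal sketch using Python's built-in `sqlite3` module, with an in-memory database standing in for your SQL engine; the `articles` table, its columns, and `load_into_sqlite` are assumptions that mirror the fields in the output JSON, not something the prompt produces.

```python
import sqlite3

# Hypothetical table layout mirroring the article fields in the output JSON
SCHEMA = """
CREATE TABLE IF NOT EXISTS articles (
    company TEXT, retrieved_at TEXT, title TEXT, source TEXT,
    date TEXT, url TEXT, sentiment TEXT, summary TEXT
)
"""
COLUMNS = ("title", "source", "date", "url", "sentiment", "summary")


def load_into_sqlite(data: dict, conn: sqlite3.Connection) -> int:
    """Insert every article from one JSON response; return the number of rows added."""
    conn.execute(SCHEMA)
    rows = [
        (data.get("company"), data.get("retrieved_at"), *(a.get(c) for c in COLUMNS))
        for a in data.get("articles", [])
    ]
    # "?" placeholders let the driver handle quoting of model-generated text
    conn.executemany("INSERT INTO articles VALUES (?, ?, ?, ?, ?, ?, ?, ?)", rows)
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")
data = {"company": "Apple Inc.", "retrieved_at": "2024-04-27T12:00:00Z",
        "articles": [{"title": "t", "source": "s", "date": "2024-04-26",
                      "url": "u", "sentiment": "positive", "summary": "x"}]}
print(load_into_sqlite(data, conn))  # prints: 1
```

Because values are bound as parameters, quotes or semicolons inside a summary cannot change the SQL statement itself.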

Limitations to Consider

While ChatGPT offers various benefits, there are limitations that you should be mindful of when scaling its use.

Accuracy and Reliability

ChatGPT generates responses based on patterns and probabilities learned from vast datasets, much of them publicly available information from the Internet. The "artificial" in artificial intelligence matters here: for now, the model does not really think; it makes associations based on its training data.

This means that it can occasionally produce inaccurate or misleading information, especially in specialized or nuanced contexts. You must ensure that the AI’s outputs are validated before they’re used in business-critical applications.
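For the news example, one cheap validation step is to flag articles whose dates cannot be parsed or fall after the retrieval timestamp, both signs that the model may have invented details. A minimal sketch, assuming the `date` and `retrieved_at` fields from the output JSON; `parse_iso` and `flag_suspicious_dates` are hypothetical helpers, and they catch only these two symptoms, so sources and URLs still need checking.

```python
from datetime import datetime, timezone


def parse_iso(value):
    """Parse an ISO 8601 date or timestamp into an aware UTC datetime, or return None."""
    try:
        parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    except (AttributeError, ValueError):
        return None
    # Treat naive values (e.g. "2024-04-26") as UTC
    return parsed if parsed.tzinfo else parsed.replace(tzinfo=timezone.utc)


def flag_suspicious_dates(data: dict) -> list[str]:
    """Return titles of articles with unparseable dates or dates after retrieval."""
    retrieved = parse_iso(data.get("retrieved_at", ""))
    flagged = []
    for article in data.get("articles", []):
        published = parse_iso(article.get("date", ""))
        if published is None or (retrieved is not None and published > retrieved):
            flagged.append(article.get("title", "<untitled>"))
    return flagged


sample = {"retrieved_at": "2024-04-27T12:00:00Z", "articles": [
    {"title": "A", "date": "2024-04-26"},
    {"title": "B", "date": "2024-05-01"},
    {"title": "C", "date": "last Tuesday"},
]}
print(flag_suspicious_dates(sample))  # prints: ['B', 'C']
```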

Bias in AI Models

Like all AI models, ChatGPT is susceptible to biases in the data on which it was trained. These biases can manifest in various ways, such as favoring certain outcomes or perspectives over others. It is important to invest some time at the start of an integration to ensure that the results meet your company's standards. If you fine-tune the model on your company's data, that data will also leave a footprint in the responses it gives you.

Data Privacy Concerns

To avoid exposing sensitive enterprise information, the ideal scenario would be to deploy a private instance. This can be on an on-premises server, cloud infrastructure, platform as a service, or directly on OpenAI private instances—whatever best fits your organization’s policies and technology.

Conclusion

Integrating ChatGPT at scale into your enterprise offers enormous potential to automate routine tasks, enhance customer support, and unlock valuable data insights. While we covered only two examples, the range of possible use cases is far broader.

Start unlocking the untapped potential of Generative AI!

If you’re looking to better harness AI to skyrocket productivity and efficiency at your organization, attend one of our free generative AI workshops to explore common AI use cases, strategies, and more.