Google Analytics is used to monitor website performance and gather important visitor data. If you’re reading this blog, you’re probably looking for a quick, easy-to-maintain, and low-cost method for moving data from Google Analytics into the Snowflake Data Cloud.

If that’s you, you’re in the right place!

In this blog, we will extract Google Analytics data in a very cost-effective way without writing any piece of code. We will build a data pipeline using AWS App Flow native connectors for Google Analytics and Snowflake Connect as source and destination respectively. Once the data is loaded, we will verify it using Snowflake queries.

Step 1: Enable Google Analytics API

The first step in moving your Google Analytics data is to access the analytics data. Google Analytics data can be accessed in the same way that every other Google API is, you just need to leverage the Google API console to manage applications and access Google Analytics data.

To do this, go to your Google API console and create a new project by giving the project a name. While creating your new project, you can associate your project with an organization.

On successful creation of your project within the Google API Console, a unique project ID is generated as shown in Figure-3. All your billing for individual services and API rate limits will be controlled and managed within this project context.

Step 2: Enable APIs & Service

The next step is to go to the APIs & Service option from the left pane and click on the Enable APIs & Service button to get access to the API library page. The search option on the API Library page will help you to locate the Google Analytics API. Alternatively, the category filter can also be used from the left pane to search the APIs.

Once the Google Analytics API is listed, select it and enable the API by clicking on the Enable button. This will allow it to be accessed via other applications and will permit the data extraction process to be triggered. Refer to Figure-6 and Figure-7 where Google Analytics API is enabled and the confirmation is displayed.

Step 3: External Application OAuth Consent

Once the Google Analytics API is enabled, our next step is to allow an external application OAuth Consent by providing the app name and email address.

Step 4: Add Scope

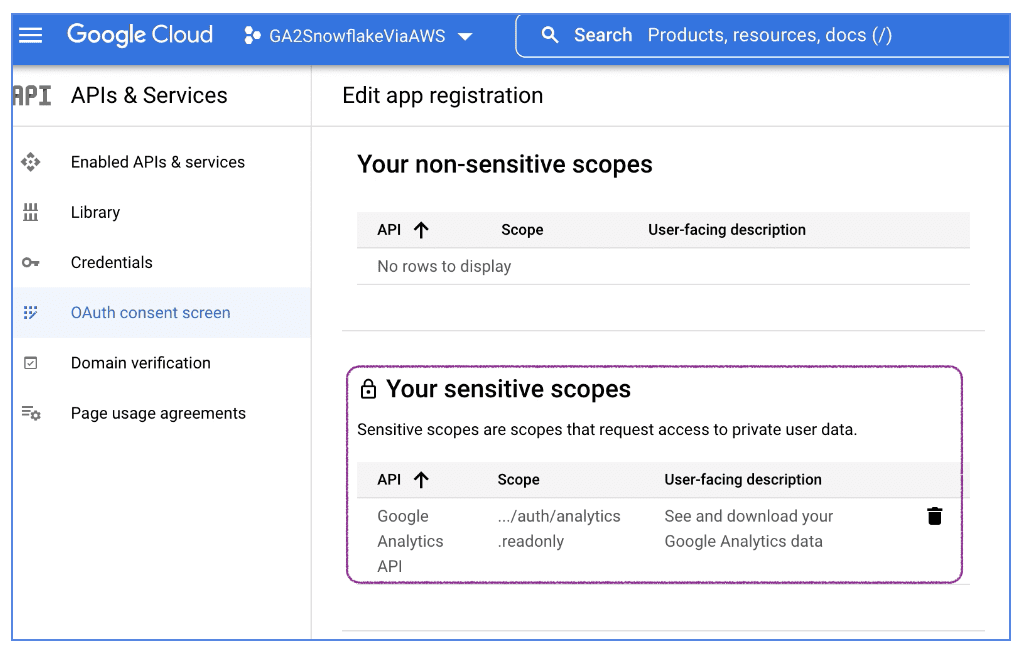

Once you save and continue, the next step is to add a scope, which helps external applications get permission to see and download Google Analytics data. Refer to Figure-10 to see which scope to select.

Once the permission is successfully granted, the scope will be added and listed on the page. Click on Save & Continue to complete the process.

Step 5: Add a Credential

The next step is to add a credential by creating an OAuth Client ID.

Also, be sure to add authorized JavaScript origin as per your AWS region. Refer to Figure-14 to see what the final URLs look like.

Upon the successful completion of the credential creation step, the client ID and client secret will be created by the Google API console.

Step 6: Register Google Analytics As Source With Amazon AppFlow

Now that we have successfully created a Google Analytics API client ID, it’s time to register it before it can be used by Amazon AppFlow to extract data from Google Analytics.

Amazon AppFlow has a Google Analytics connector built-in and can be selected from the available source connector listing. We need to configure the Google Analytics connector by providing the client ID and client secret key.

Once you select the Google Analytics connector, a new popup screen comes up and allows you to specify the client ID and client secret keys and register them.

Step 7: Register Snowflake As Destination With Amazon AppFlow

Once Google Analytics is configured as a source connector, it’s time to register Snowflake as a destination connector using the Amazon AppFlow built-in connectors.

To start, select Snowflake as the destination connector from the AppFlow console and provide Snowflake connection parameters. Once complete, register it.

Step 8: Extract Google Analytics Data Using Amazon AppFlow

Now that we have our Google Analytics API configured and registered with Amazon AppFlow connectors, it’s time to create a data pipeline to fetch data from Google Analytics. Amazon AppFlow comprises of five steps as shown below in Figure-20.

Step 1 allows us to assign a name to our data pipeline along with a description as an optional field. Step 2 helps us to choose Google Analytics as the source followed by a source connection. Google Analytics report object and S3 bucket parameters are shown in Figure-21 and Figure-22.

Once the source, connection, and target parameters are all set, the next step is to select the Google Analytics fields name along with field mapping and apply the formula if the basic transformation is needed as shown in Figure-23.

The last step is to add any filter, like date range, to narrow down the result from google analytics API.

Once all the configuration steps are done and submitted, a successful message appears and the data pipeline is ready to fetch data from Google Analytics to the S3 bucket.

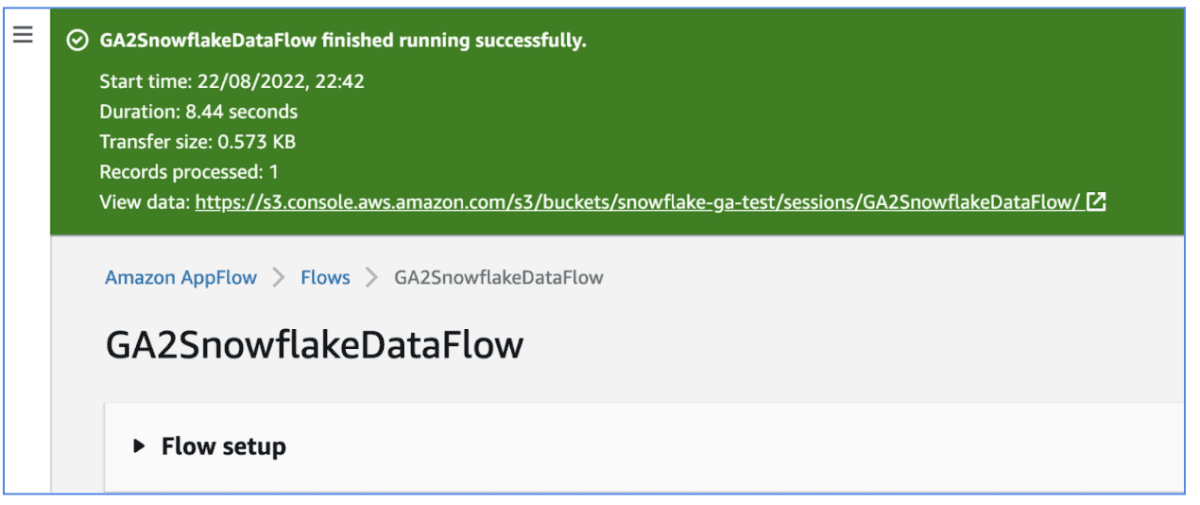

Click on the Run Flow button and the data extraction pipeline will trigger the job. It will take a while based on your data size and filter criteria (if applied). Once the job execution is finished and data is extracted, a success message will appear.

If you wish, you can also schedule the job and run it at regular intervals without any human intervention.

The AppFlow execution puts the data into the S3 bucket and this bucket is referred to as an external stage in your Snowflake environment.

Step 9: Access Google Analytics Data Into Snowflake

Now your S3 bucket should have Google Analytics data in a JSON format and your Snowflake instance has a named staged created linked to this S3 location. Next, let’s execute a list command and see all the JSON files extracted from Google Analytics. The result should look like Figure-26 below.

The extract of Google Analytics data is available in a JSON format and it is a nested JSON. It needs further flatting before it can be used as a standard table in Snowflake.

Snowflake SQL supports JSON attribute extraction using colon notation. By using this SQL construct, the Google Analytics data can now be parsed and converted into a table format for further analysis.

This is how Google Analytics data can be extracted from and ingested into a Snowflake data warehouse and by using colon notation, deep nested JSON can be parsed.

Snowflake’s native support for JSON and external named stage allows Google Analytics data to flow into Snowflake with ease. Once the analytics data is available in tabular format, it can be further combined with other datasets to get deeper insights.

Programmatic Approach To Extract Google Analytics Data

The Google Analytics data can also be extracted using Java or Python programming language and can be scheduled using a custom scheduler. However, this programmatic approach needs custom coding, exception handling, packaging/bundling, deployment, run-time infrastructure, monitoring, and additional operational overhead.

The approach followed in this blog is much simpler, easy to build, cost-effective, secure, and integration-friendly (S3 as external stages for Snowflake).

Tips To Remember

We have covered and learned a lot about loading data into Snowflake from Google Analytics. Here are a few pointers:

- While building the data integration pipeline with Google Analytics as a data source, understand the Google Analytics API rate limits and data processing latency to ensure that you are not extracting too much and too frequently.

- Google Analytics calculates certain combinations of dimensions and metrics on the fly. To extract a more accurate data set (sampling level), use the filter in your data pipeline while accessing and extracting Google Analytics.

- Snowflake external tables are not refreshed automatically, so to access all newly arrived google analytics JSON files, set the auto refresh flag to true.

- To extract deeply nested JSON elements, use Snowflake’s semi-structure data traversing capabilities and follow dot or bracket notation to access them.

- Snowflake’s flatten function can also be used to extract nested arrays within the Google Analytics data set.

Conclusion

We hope you got a clear understanding of how Google Analytics data can be loaded into Snowflake using external stages and how it can be further queried using Snowflake’s semi-structured query capabilities.

Looking to extract more value from your Snowflake investment? Learn how the experts at phData can help by exploring our Snowflake consulting services.