Google Analytics 4 (GA4) is a powerful tool for collecting and analyzing website and app data that many businesses rely heavily on to make informed business decisions.

However, there might be instances where you need to migrate the raw event data from GA4 to Snowflake for more in-depth analysis and business intelligence purposes. In this step-by-step guide, we will walk you through setting up a data ingestion pipeline using Azure Data Factory (ADF), Google BigQuery, and the Snowflake Data Cloud.

By the end of this tutorial, you’ll have a seamless pipeline that fetches and syncs your GA4 raw events data to Snowflake efficiently.

Overview

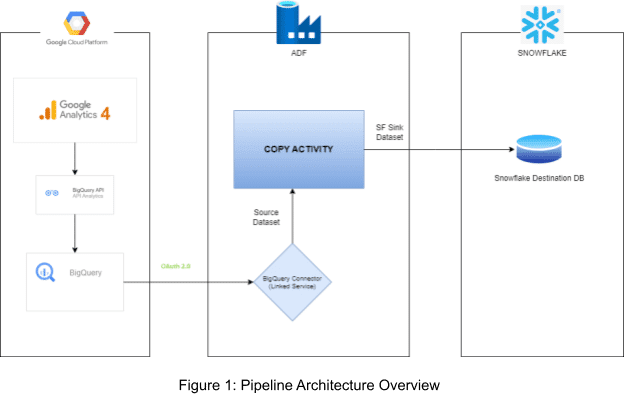

To achieve this data migration, we will use Azure Data Factory to orchestrate the process. Google BigQuery will act as an intermediate step to move data from Google Analytics 4 before it finally lands in Snowflake.

Let’s briefly look at the key components and their roles in this process:

Azure Data Factory (ADF): ADF will serve as our data orchestration and integration platform. It enables us to create, schedule, and monitor the data pipeline, ensuring seamless movement of data between the various sources and destinations.

Google BigQuery: BigQuery acts as an intermediate step between Google Analytics 4 and Snowflake. It allows us to extract raw event data from GA4 and temporarily store it for further processing before loading it into Snowflake.

Snowflake: Snowflake is our final destination, where the raw event data from Google Analytics 4 will be stored and made available for advanced analytics, reporting, and business intelligence purposes. Snowflake’s scalable architecture ensures high performance and efficient data handling.

GA4 Pipeline Architecture Overview

Before we dive into the technical details, let’s understand the high-level architecture of our data ingestion pipeline:

GA4 Data Export Setup: We’ll link the Google Analytics 4 property to BigQuery, enabling the export of raw event data.

Google Cloud Platform Setup: This step involves enabling the BigQuery API, setting up the environment, and obtaining oAuth 2.0 credentials.

Azure Data Factory’s Google BigQuery Connector Setup: We will create a linked service in ADF using the oAuth 2.0 credentials obtained in the previous step.

Copy Into Activity: We’ll use this activity to execute the query using the linked service connection to BigQuery, then fetch the data. Subsequently, it will Push the data to our destination, Snowflake.

UNNEST Data Fetch: Instead of flattening data after loading it into Snowflake, we’ll fetch UNNEST data from BigQuery directly using the query option in the COPY ACTIVITY source setting.

Sink Data to Snowflake: Lastly, we Push our data to the snowflake destination table.

How to Load Google Analytics 4 Dataset into Snowflake

Let’s now walk through the step-by-step process of migrating Google Analytics 4 raw event data to Snowflake using BigQuery and Azure Data Factory.

Step 1: GA4 Data Export & OAuth Credential Set-up

Step 1.1: Set up BigQuery Export

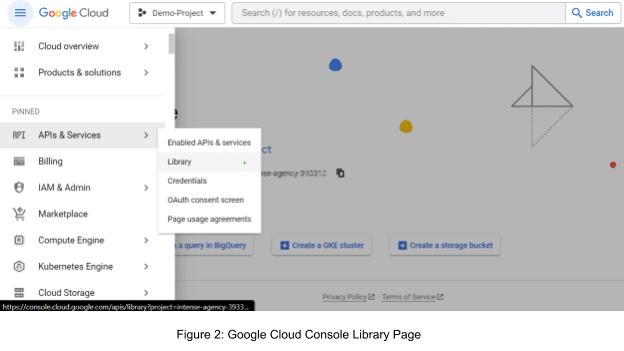

Go to your Google Cloud console, login, and open the Navigation menu.

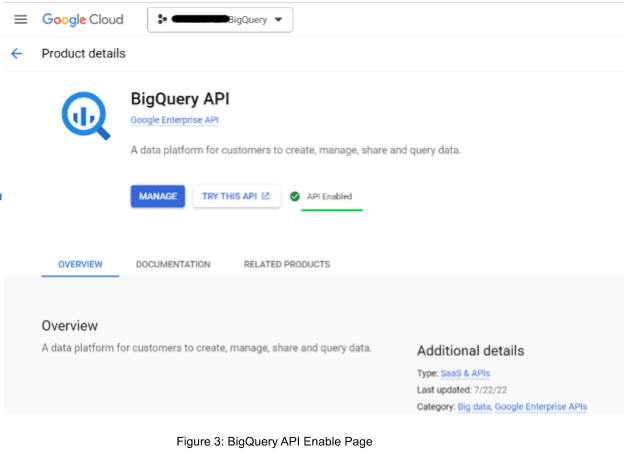

Click on “APIs & Services” and then “Library.”

-

Search for “BigQuery API” and enable it.

Step 1.2: Prepare Your Project for BigQuery Export

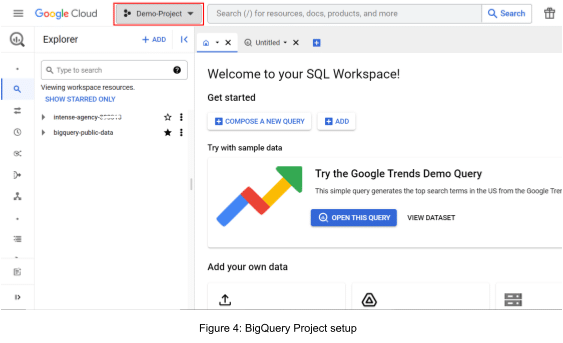

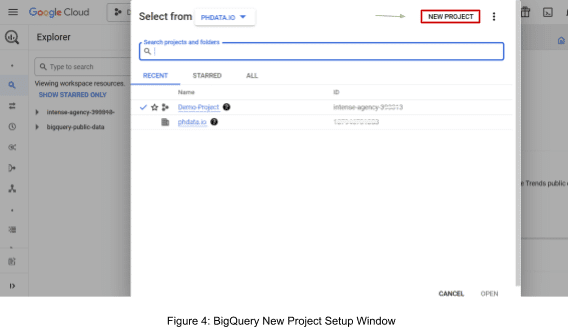

Go to your BigQuery console or dashboard and create or select an already existing project in which you want to export your data.

Step 1.3: Link a Google Analytics 4 Property to BigQuery

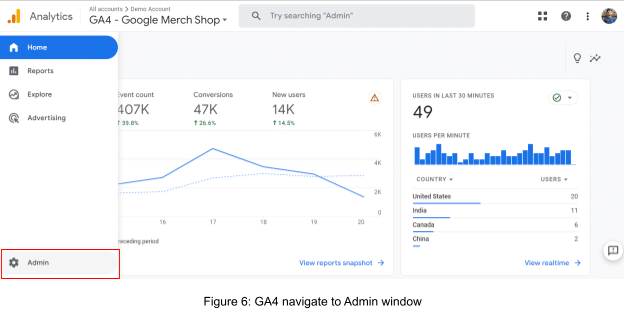

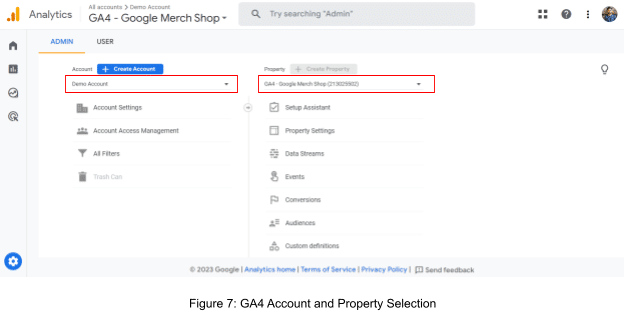

- In Google Analytics, click “Admin.”

-

Ensure you are in the correct account and property.

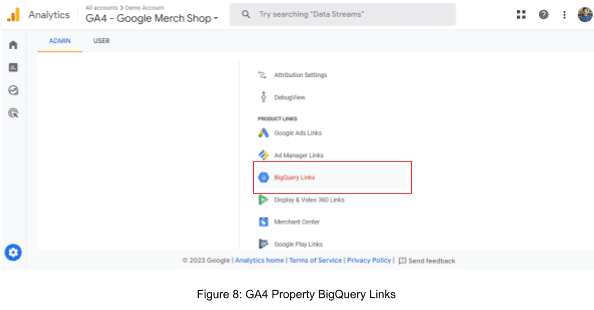

- Under “PRODUCT LINKS,” click “BigQuery Links”.

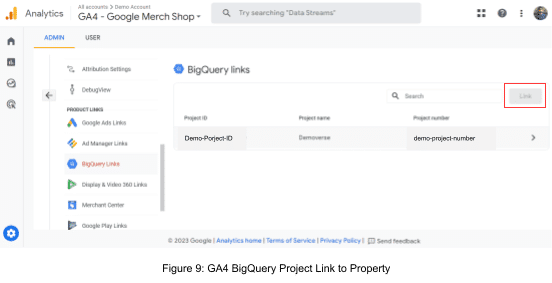

- Click “Link” and select a BigQuery project from the list. Click “Confirm.”

-

Select a location for the data. If your project already has a dataset for the Analytics property, you can’t configure this option. Click “Next.”

-

Click “Next.”

-

Select “configure data streams and events” to select which data streams to include with the export and specific events to exclude from the export. You can exclude events by either clicking “Add” to select from a list of existing events or by clicking “Specify event by name” to choose existing events by name or to specify event names that have yet to be collected on the property.

-

Click “Done.”

-

Select “Include advertising identifiers for mobile app streams” if you want to include advertising identifiers.

-

Select either or both a Daily (once a day) or Streaming (continuous) export of data.

-

Click “Next.”

-

Review your settings, then click” Submit.”

-

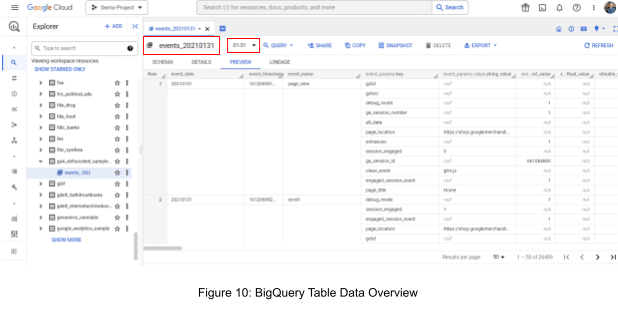

Check the export in the BigQuery Project. Data will be exported based on your setting as Daily or Streaming. Once data is available, it looks like this:

-

Note that currently data is nested. We will use the

UNNESTfunction to parse the data or create a new row against eachevent_param.key. -

For each Google Analytics 4 property and each Firebase project that is linked to BigQuery, a single dataset named

analytics_<property_id>is added to your BigQuery project. Property ID refers to your Analytics Property ID, which you can find in the property settings for your Google Analytics 4 property. -

Within each dataset, a table named

events_YYYYMMDDis created each day if the daily export option is enabled. If the streaming export option is enabled, a table namedevents_intraday_YYYYMMDDis created.

Step 1.4: Create OAuth 2.0 Client IDs

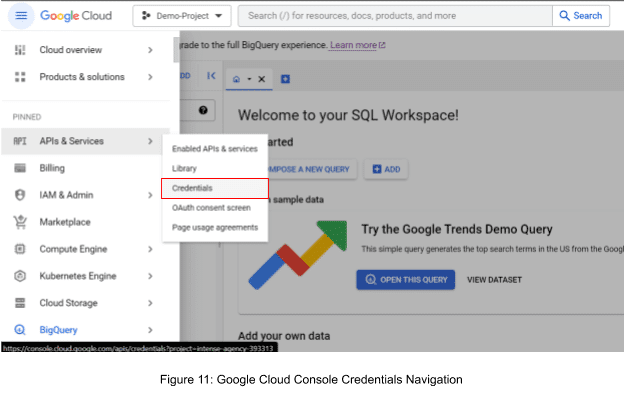

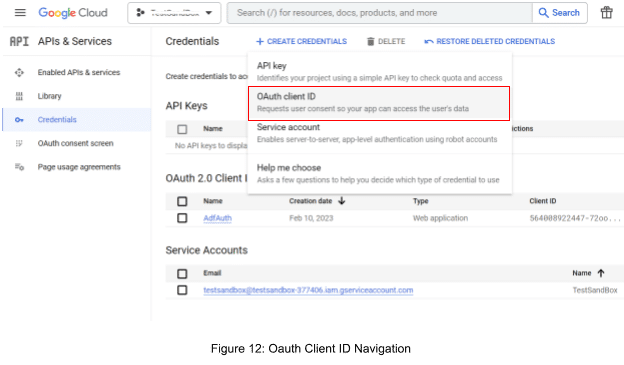

- Go to your Google Cloud console API dashboard and navigate to “Credentials.”

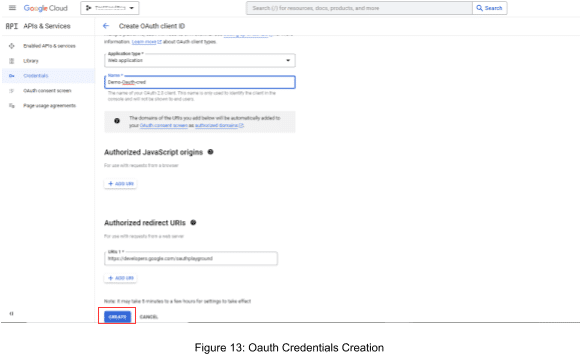

- Create new credentials, choosing “Web Application” as the app type since we’re using Azure Data Factory.

- Set “Authorized redirect URIs” to get the refresh token

(use https://developers.google.com/oauthplayground).

- Retrieve the credentials, including Client ID, Client Secret, and Refresh Token. (Use this Google developer oAuth playground to retrieve refresh token.)

Step 2: Azure Data Factory Pipeline Setup

ADF Pipeline creation: Create a new pipeline in Azure Data Factory with the name “ga4-bigquery-pipeline” or other name you deem appropriate.

COPY ACTIVITY setup: Select COPY ACTIVITY.

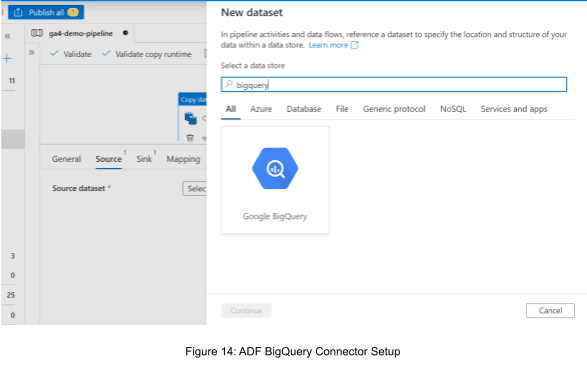

Source(BigQuery) Dataset setup:

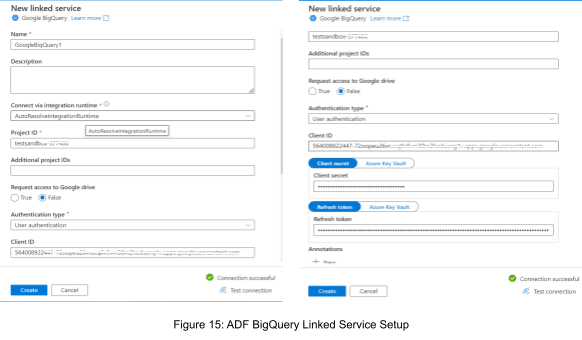

Create a new dataset using the Google BigQuery connector. Select the “Linked Service” option and create a new linked service.

Set up the Google BigQuery connector as a linked service by providing the Project ID and the oAuth 2.0 Client ID, Secret, and Refresh Token obtained in the earlier steps.

-

-

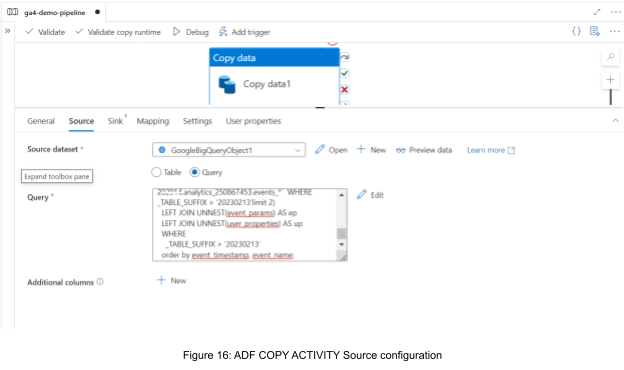

Now, we will use COPY ACTIVITY and select our BigQuery dataset as a source connection. We will use Query to use the UNNEST function on nested data or columns to retrieve UNNESTED data from BigQuery.

-

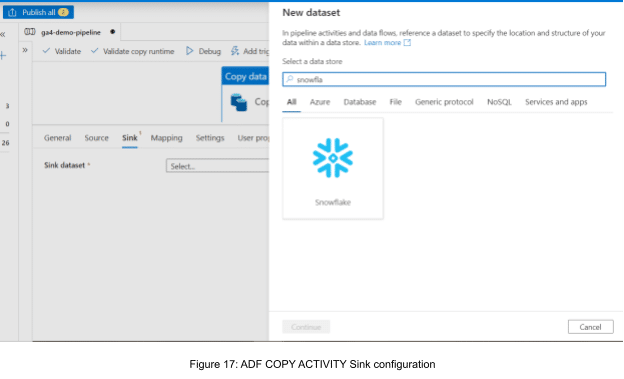

- Sink (Snowflake) Dataset setup: Create a Snowflake-linked service in Azure Data Factory, providing the necessary credentials to connect to your Snowflake instance.

Create a table with the table schema matching to your query, which we have set in the source dataset, and use the Mapping option in COPY ACTIVITY for mapping BigQuery Event table Columns to Snowflake Table columns.

Congratulations! You have completed all the setup. Now publish the ADF changes or test the pipeline by selecting the “Debug” option. It will actually execute the pipeline, and data will be loaded to the Snowflake destination table.

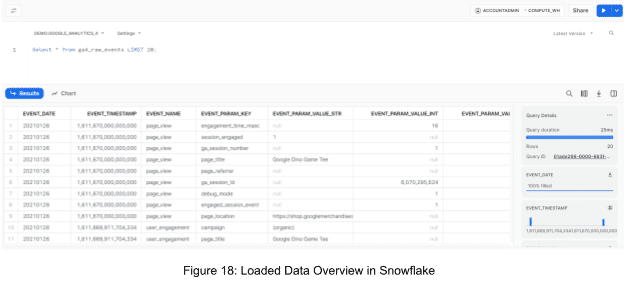

Step 3: Access GA4 Data In Snowflake

Finally, the data should be available in Snowflake once the ADF pipeline is triggered and completed successfully. Go to your Snowsite UI and open a worksheet. Select data from the table which we have set in the ADF Pipeline Sink Configuration.

Congratulations! You’ve now completed the initial setup for migrating your Google Analytics 4 raw event data to Snowflake using BigQuery and Azure Data Factory.

Key Takeaways

Respecting BigQuery API Rate Limits: When building your data integration pipeline with Google Analytics as a data source, it’s crucial to understand the API rate limits and data processing latency. Ensure that you are not extracting data too frequently or in excessive volumes to avoid hitting rate limits or causing data delays.

Utilizing Snowflake’s Flatten Function for Nested Arrays: Currently, in this guide, we have used the UNNEST Function provided by BigQuery; however, we can achieve the same using Snowflake’s “flatten” function, and it can be utilized to efficiently extract and unnest these nested arrays within the dataset.

Automating Daily Load to Snowflake: When automating the daily load, passing a dynamic table name to your query in the COPY ACTIVITY Source setting can help fetch incremental data only. To achieve this, you can use LOOKUP ACTIVITY to retrieve the last pipeline runtime and dynamically modify the query accordingly. To enhance the efficiency of your data pipeline, consider automating the daily data load to Snowflake. This can be achieved by adding a trigger to your Azure Data Factory (ADF) pipeline, ensuring that data is regularly and seamlessly synchronized.

- Monitoring and Alerting: Set up monitoring and alerting systems to proactively identify any issues or bottlenecks in the data pipeline. Monitoring data transfer rates, pipeline execution times, and resource utilization can help in timely troubleshooting and performance optimization.

Conclusion

After you have followed this step-by-step guide, you successfully have learned how to set up a data ingestion pipeline, leverage intermediate data storage, and finally land the data in Snowflake for advanced analytics and business intelligence purposes.

The integration of Google Analytics with Snowflake allows businesses to gain deeper insights from their website and app data, facilitating data-driven decision-making and enhancing overall business performance.

Overall, these powerful cloud-based tools provide a scalable and cost-effective solution for managing, processing, and analyzing large volumes of data from Google Analytics in Snowflake.

Need help setting up a data ingestion pipeline?

Definitions / Abbreviations:

Sink: In the context of data integration, a “sink” refers to the destination where data is loaded or written. In this blog, the “sink” refers to Snowflake, which is the final destination where the raw event data from Google Analytics 4 will be stored and made available for further analysis.

Source: In data integration, a “source” is the location from which data is extracted or read. In this blog, the “source” refers to Google BigQuery, which serves as an intermediate step to move data from Google Analytics 4 before loading it into Snowflake.

Data Ingestion: The process of importing or loading data from a source into a data storage or processing system is known as “data ingestion.” In this blog, data ingestion is the process of extracting raw event data from Google Analytics 4 and loading it into Snowflake via BigQuery and Azure Data Factory.

Azure Data Factory (ADF): Azure Data Factory is a cloud-based data integration service provided by Microsoft. It allows users to create data-driven workflows for orchestrating and automating data movement and data transformation processes.

Google BigQuery: Google BigQuery is a fully managed, serverless data warehouse and analytics platform provided by Google Cloud. It allows users to run SQL-like queries on large datasets and provides real-time analytics capabilities.

Snowflake: Snowflake is a cloud-based data warehousing platform that offers high performance, scalability, and ease of use for storing and analyzing large volumes of data. It provides a fully managed data warehouse as a service.