The components of an MLOps framework sound great, but what do they actually provide to teams on a day-to-day basis? It’s possible to talk at length about feature stores, how to deploy models into production, and the costs involved with doing so, but instead let’s take a look at what MLOps really means to an organization, and how it can change the way you do machine learning.

There’s one crucial question that must be asked of any machine learning project, and MLOps is no different: If this project is successful, how will it change our day-to-day business?

Life with MLOps by Role

For the rest of this post, we’ll explore what role each ML position plays in helping accelerate MLOps for a typical business. Each of the titles discussed below is based on the real-life work and routines of ML professionals. You’ll get a realistic look at what they do, how they help, and ultimately, what MLOps looks like in practice by role.

The Director

The Director begins the day the same way that they always do: making sure nothing is on fire and ensuring there are no impending disasters. As someone who is responsible for the organization’s entire machine learning program, there are plenty of things that can go wrong and there’s always the danger that critical information doesn’t get passed up the chain.

Just before the caffeine from their first cup of coffee kicks in, they pull up the “big board.” This display is where they view the statuses reported by the systems that monitor production models. Today, the Director sees that model usage is increasing, but response times for the major models are still within the acceptable range.

Having a central operational status dashboard allows at-a-glance viewing of critical model health.

Centralized reporting and monitoring provides an at-a-glance overview of the current status. By incorporating automated alerting and escalation, both leadership and the team can gain peace of mind: if there are no alerts, things are running smoothly.

There is an alert for the customer loyalty model: minor data drift. The model was trained on the registered customer base, but the demographic composition of new loyalty program members has shifted. Recently, more loyalty registrations have come from customers in the 25-35 age range.

It’s not a cause for concern, and the Director will receive an email if the drift goes out of the acceptable range. The Director makes a note to share that the company is now seeing an uptick in loyalty registrations among younger customers at the next leadership meeting.

Data drift monitoring can detect problems before they affect model performance, but it can also detect anomalies and changes that are interesting in and of themselves.
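As a rough illustration of how a drift check like the one on the loyalty model might work, the sketch below compares the age distribution of the training population against recent registrations using the Population Stability Index (PSI). The function names, the age bins, and the 0.1 and 0.25 thresholds are assumptions chosen for illustration (the thresholds follow a common rule of thumb); they are not taken from any particular monitoring product.

```python
import math
from collections import Counter


def population_stability_index(baseline, current, bins):
    """Compare two samples of a numeric feature across fixed [low, high) bins."""

    def shares(values):
        counts = Counter()
        for value in values:
            for index, (low, high) in enumerate(bins):
                if low <= value < high:
                    counts[index] += 1
                    break
        total = sum(counts.values())
        if total == 0:
            raise ValueError("no values fell inside the supplied bins")
        # A small floor avoids log(0) when a bin is empty.
        return [max(counts[i] / total, 1e-6) for i in range(len(bins))]

    base, cur = shares(baseline), shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


def drift_status(psi, warn=0.1, alert=0.25):
    """Map a PSI value to a status; the thresholds are illustrative assumptions."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warning"
    return "ok"


# Hypothetical data: ages at training time versus ages of recent registrations.
age_bins = [(18, 25), (25, 35), (35, 50), (50, 120)]
training_ages = [22] * 25 + [30] * 25 + [42] * 25 + [61] * 25
recent_ages = [22] * 20 + [30] * 40 + [42] * 20 + [61] * 20
print(drift_status(population_stability_index(training_ages, recent_ages, age_bins)))
```

A check like this could run on a schedule, with the "warning" status feeding the dashboard and the "alert" status triggering the email escalation described above.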

It also appears that product-purchase data has been updated with new purchase records from customers. The new data triggered an update of the “propensity to buy” model’s measured accuracy. Recent model performance resulted in a 20 percent increase in audience targeting efficiency and has now saved $670k in the current fiscal year. The Director makes a note of that too; they love sharing these metrics with leadership, but it is almost more rewarding to share them with the ML team and make sure that the team understands the value of its work.

Closed-loop monitoring of production model accuracy is useful for performance monitoring, but it also provides a valuable look at the business value generated by your models.

The ML Team Lead

The Team Lead has been very happy with the team, which continues to perform well and build momentum. Just before lunch, the Lead noticed that there were no major active alerts. Only one model looked like it was starting to show drift, and it needed no immediate action. Looking at the model registry, they could see that 20 models had development activity and 10 of those models were updated versions of models currently in production. Some had passed all of their automated tests, and the automated model comparison showed that the new models performed better than their production counterparts. A few had been promoted to production and replaced the prior version; some of the other updates were scheduled for the next maintenance window, and some were set to require manual approval and were ready for review.

With all the relevant metrics being displayed in the registry, the Lead decided to focus their time on research for possible projects to tackle next.

Model registries provide several critical functions: they enable activity tracking and centralize metric displays, while also providing valuable features for auditing and re-use of prior work.
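The promotion flow the Lead sees in the registry (automated tests, comparison against the production model, then automatic promotion, a maintenance window, or manual approval) can be sketched as a small decision function. Everything here is hypothetical: the `Candidate` fields, the `PromotionPolicy` names, and the single-score comparison are assumptions for illustration, not the interface of a specific registry.

```python
from dataclasses import dataclass
from enum import Enum


class PromotionPolicy(Enum):
    AUTOMATIC = "automatic"
    MAINTENANCE_WINDOW = "maintenance_window"
    MANUAL_APPROVAL = "manual_approval"


@dataclass
class Candidate:
    name: str
    tests_passed: bool
    candidate_score: float   # metric for the new version (higher is better)
    production_score: float  # same metric for the version currently in production
    policy: PromotionPolicy


def next_step(candidate: Candidate) -> str:
    """Decide what happens to a new model version after automated checks."""
    if not candidate.tests_passed:
        return "rejected: failed automated tests"
    if candidate.candidate_score <= candidate.production_score:
        return "held: no improvement over production"
    if candidate.policy is PromotionPolicy.AUTOMATIC:
        return "promote now"
    if candidate.policy is PromotionPolicy.MAINTENANCE_WINDOW:
        return "scheduled for next maintenance window"
    return "awaiting manual review"


# Hypothetical candidate: better than production, but gated on human approval.
loyalty_v2 = Candidate("customer_loyalty", True, 0.91, 0.88, PromotionPolicy.MANUAL_APPROVAL)
print(next_step(loyalty_v2))
```

Keeping the policy on the model record, rather than in the deployment script, is one way to let low-risk models ship automatically while sensitive ones still wait for review.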

The Data Scientist

The Data Scientist was busy with heads-down work developing a new model. They were working from the standard ML project template, checking in changes to their version-control system, and the model was almost complete. The project template provided several function stubs and placeholders for code, so the Data Scientist was confident that their new model’s code fulfilled all of the architecture and API requirements for production usage and testing.

Project templates make it easier to begin new work. Additionally, you can ensure that models conform to standard practices and will not be missing any critical functionality later down the line.
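One way a template can enforce those requirements is with an abstract base class whose stubs must be filled in before the model can even be instantiated. The class and method names below (`ModelProject`, `load_features`, `train`, `predict`, `health_check`) are hypothetical, as is the toy model; this is a minimal sketch of the idea rather than any organization's actual template.

```python
from abc import ABC, abstractmethod
from typing import Any, Mapping, Sequence


class ModelProject(ABC):
    """Template: every production model must fill in these stubs."""

    @abstractmethod
    def load_features(self) -> Sequence[Mapping[str, Any]]:
        """Return the training rows."""

    @abstractmethod
    def train(self, rows: Sequence[Mapping[str, Any]]) -> None:
        """Fit the model on the supplied rows."""

    @abstractmethod
    def predict(self, row: Mapping[str, Any]) -> float:
        """Score a single record."""

    def health_check(self) -> dict:
        """Provided by the template so monitoring always has an endpoint to call."""
        return {"model": type(self).__name__, "status": "ok"}


class MeanSpendModel(ModelProject):
    """Toy model: predicts the average spend seen in training."""

    def __init__(self) -> None:
        self.mean_spend = 0.0

    def load_features(self):
        # Hard-coded rows stand in for a real data source.
        return [{"customer_id": 1, "spend": 40.0}, {"customer_id": 2, "spend": 60.0}]

    def train(self, rows):
        self.mean_spend = sum(row["spend"] for row in rows) / len(rows)

    def predict(self, row):
        return self.mean_spend


model = MeanSpendModel()
model.train(model.load_features())
print(model.predict({"customer_id": 3}), model.health_check())
```

A subclass that leaves out any of the abstract methods raises `TypeError` on instantiation, so a missing piece surfaces during development instead of at deployment time.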

The news feed showed that there had been some updates to the feature store, and two of those affected the customer records that they were currently using in their new model. Their teammates had built a segmentation model which assigned segments to customers based on demographics.

The segmentation model went into production, which meant that the output of that model was fed back into the feature store. The segment assigned to each customer appeared to have predictive value for the new model, so the Data Scientist decided to use the segmentation model’s output as an input. Initial testing on a sample of the customer base was promising, so the Data Scientist scheduled a full test run; if all went as planned, the model would be in production by the end of the day.

Feature stores provide a central place for all of the primary data used in modeling, and they also enable easy re-use of prior work. Derived features and the output of prior models can be added to the feature store as they are developed and immediately made available for use in new development.

The ML Engineer

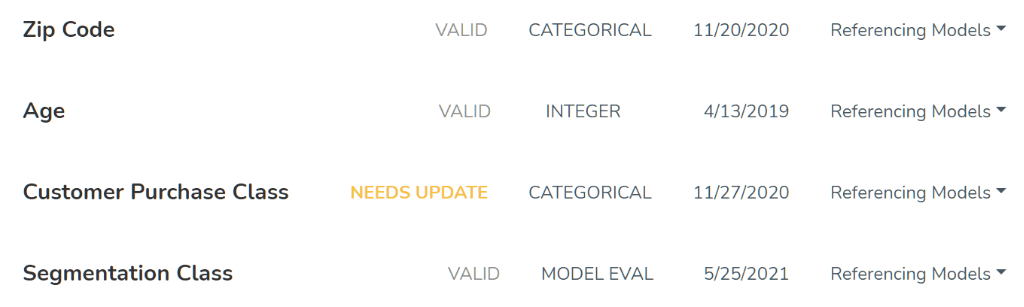

The ML Engineer (MLE) looked through recent activity and alerts. Ten new models had passed their test suites, and some went into production automatically. The MLE made a note to review the models that required manual approval. There was a minor data drift alert, but it had not yet reached the automated retraining threshold and could be set aside for later. The feature store showed a lot of activity: 14 new features had been added. Nine of these were new derived features, and five were outputs and predictions from other ML models.

The MLE received an alert from Data Engineering. The business had changed the values used in the CUST_PURCHASE_CLASS field (a particular field related to the type of customer purchase). That field was in use by some models, so the MLE wrote a bit of code to map the new values back to the old values and keep those models running without issues. The new values for that field were added to the feature store as a new feature, and the old feature was marked as deprecated; old models would function without interruption, but data scientists would be directed to use the new feature for future model development.

Later in the afternoon, the MLE received a new task from compliance. Regulators needed to ensure that a model did not rely on data about protected classes of potential customers. Automated testing showed no apparent bias, but more information was needed. The MLE pulled an audit report which showed the lineage for the primary and derived features for that model. The model had dependencies on one other model, so those features were included as well. The MLE included a note that they could pull a copy of the specific version of the data used for training and the predictions made by that model if needed.

An effective MLOps implementation can ensure that changes in underlying data definitions do not result in model disruption. Additionally, MLOps enables lineage and governance to track both the features used and the exact data consumed or produced by a model.
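The compatibility shim for CUST_PURCHASE_CLASS described earlier might look something like the sketch below. The specific old and new field values, the `CUST_PURCHASE_CLASS_V2` feature name, and the function name are all invented for illustration; the post does not say what the real values were.

```python
# Hypothetical mapping from the new business values back to the legacy ones.
LEGACY_PURCHASE_CLASS = {
    "ONLINE_WEB": "ONLINE",
    "ONLINE_APP": "ONLINE",
    "IN_STORE": "RETAIL",
}


def to_legacy_purchase_class(record: dict) -> dict:
    """Return a copy of the record that older models can still consume.

    The legacy value replaces CUST_PURCHASE_CLASS, and the new value is kept
    under a separate (assumed) feature name for future model development.
    """
    new_value = record["CUST_PURCHASE_CLASS"]
    if new_value not in LEGACY_PURCHASE_CLASS:
        # Fail loudly so an unmapped value is noticed instead of silently scored.
        raise ValueError(f"unmapped CUST_PURCHASE_CLASS value: {new_value!r}")
    return {
        **record,
        "CUST_PURCHASE_CLASS": LEGACY_PURCHASE_CLASS[new_value],
        "CUST_PURCHASE_CLASS_V2": new_value,
    }


incoming = {"customer_id": 42, "CUST_PURCHASE_CLASS": "ONLINE_APP"}
print(to_legacy_purchase_class(incoming))
```

Raising on an unknown value is a deliberate choice in this sketch: a further change to the field by the business then shows up as an alert rather than as quietly degraded predictions.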

The CEO

The CEO hadn’t heard a peep out of the ML department since they received their new toy, but they had heard a lot about increases in revenue and efficiency. The CEO wasn’t exactly sure about everything that went into MLOps, but whatever magic it performed seemed to keep that group happy and reduced the bandwidth required from the CEO.

The department leadership was reporting that concrete business value was being created and that they were ready to scale up their machine learning program in a big way; the CEO made a note for the next board meeting about increasing the size of that group. As the CEO left for the day, they knew that their investors would be pleased to hear that the company had machine learning in production, driving real business value.

This is just a peek into the operational capabilities and efficiency that are possible with MLOps. Ready to find out more?