Over the last few months I have been focused on doing various flavors of unsupervised learning on text data. I’m eager to share with you some of the fundamental differences that exist between clustering text and non-text data, as well as one major lesson I learned along the way that you won’t want to miss!

Text vs Non-Text Clustering: An Illustrative Example in User Segmentation

Clustering is a subset of unsupervised learning that is commonly leveraged across numerous use cases within data science. The value of grouping together similar entities can be powerful for problems ranging from demand forecasting to user segmentation — text mining is no exception. Despite similar fundamental objectives, there are important differences to be aware of between non-text and text-based clustering.

Let’s consider two situations for which user segmentation must be performed. In the first case, we have the following demographic data on the users: gender, age bucket, education, and state of residence. In the second case, we only have one piece of information: a resume.

Situation 1 : Non-Text Data

At the heart of any clustering problem is determining the similarity of the users to each other. Consider the demographic data of the three users above. Which user is most similar to User 1 — User 2 or User 3?

To answer this we can simply calculate the number of common values across the demographic data that Users 2 & 3 have with User 1. The more common values a user has with User 1, the more similar he/she is to User 1. User 2 is far more similar to User 1 than is User 3.

Situation 2 : Text Data

Determining similarity across users in situation 1 (no text data) is fairly simple as documented above, but how does this work when we only have the resumes for each user? Before thinking about clustering, data cleaning must be performed. This entails, but is certainly not limited to:

- Removing punctuations

- Tokenizing sentences to words

- Adding stop words

- Lemmatizing or stemming each word

- Creating N-grams

- Miscellaneous custom cleaning for dataset (HTML Tags, whitespaces, etc)

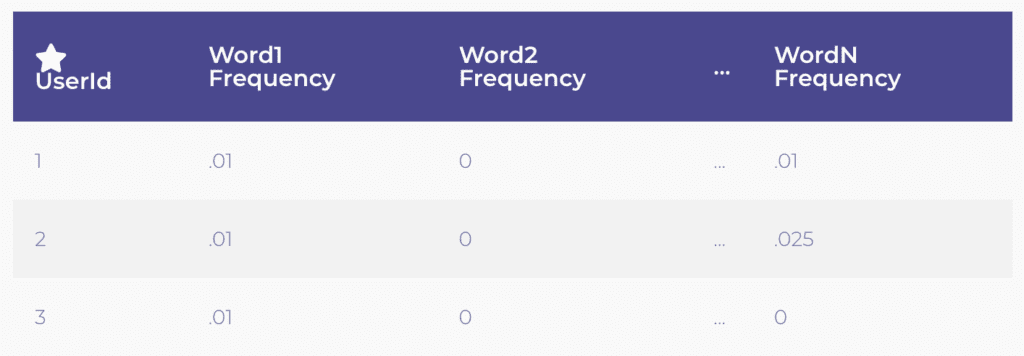

Once this is done, let’s think about how we can deem one user’s resume is similar to another user’s resume? The most intuitive approach would be to look at the frequency of words that show up across the resumes. Resumes that are most similar to each other will have similar word frequencies.

Difference #1 : Extracting Features for Text Clustering is Generally Less Straightforward

The calculation of the word frequencies in the table above is directly tied to the data cleaning. If one analyst only has minimal stop words and no n-grams created while pre-processing vs another analyst who creates extensive stop words and n-grams, the features clustered upon will be drastically different across the analysts. Hence the cluster results will differ between the two.

Difference #2 : The Number / Sparsity of Feature Space Clustered Upon

In situation 1 (non-text data), we had four features that were clustered upon. However in situation 2 (text data), the number of features is the number of unique words across all of the resumes for users 1, 2 and 3. You can also imagine that a lot of these words are only present in one or two of the resumes. Thus, many word frequencies would end up being 0. Determining similarity of users across this large and sparse matrix raises the issue of the curse of dimensionality: as we increase the number of dimensions (features), the volume of possible spaces that a user could occupy grows exponentially. Hence, trying to find distances/similarities (the basis of clustering) across larger dimensional spaces becomes quite difficult.

Key Lesson Learned: Iterative Modeling as a Mechanism for Data Cleaning

The last point I want to make is less of a difference across the two types of clustering and more a lesson on how iterative modeling and data cleaning is particularly invaluable for text clustering.

A common issue that can occur across both text and non-text clustering is having one cluster being a catch-all cluster.

One method of unsupervised learning that can be particularly prone to this issue is topic modelling (Latent Dirichlet allocation [LDA] being a commonly leveraged algorithm). Topics are groups of words that represent a common theme present across the analyzed documents. A catchall topic ends up having words that are seemingly unrelated to each other or words that are just adding noise.

These noise-creating words in the miscellaneous topic can actually be extracted and added to the data cleaning process as stop words. In doing so, we have effectively increased the signal to noise ratio for finding more optimal & meaningful clusters in future model iterations.

I hope that this post has provided some insights and useful takeaways as you explore the challenging but rewarding world of text clustering!